GigaFlow Server Sizing


Front Matter

Notice

Every effort was made to ensure that the information in this manual was accurate at the time of printing. However, information is subject to change without notice, and VIAVI reserves the right to provide an addendum to this manual with information not available at the time that this manual was created.

Copyright

© Copyright 2024 VIAVI Solutions Inc. All rights reserved. VIAVI and the VIAVI logo are trademarks of VIAVI Solutions Inc. ("VIAVI"). All other trademarks and registered trademarks are the property of their respective owners. No part of this guide may be reproduced or transmitted, electronically or otherwise, without written permission of the publisher.

Copyright release

Reproduction and distribution of this guide is authorized for US Government purposes only.

Trademarks

VIAVI is a trademark or registered trademark of VIAVI in the United States and/or other countries. Microsoft, Windows, Windows Server, and Microsoft Internet Explorer are either trademarks or registered trademarks of Microsoft Corporation in the United States and/or other countries.

Specifications, terms, and conditions are subject to change without notice. All trademarks and registered trademarks are the property of their respective companies.

Terms and Conditions

Specifications, terms, and conditions are subject to change without notice. The provision of hardware, services, and/or software are subject to VIAVI’s standard terms and conditions, available at https://www.viavisolutions.com/terms.

Version Control

| Version | Date | Author | Comments |
|---|---|---|---|
| 18.1.0.1 | 2020-08-20 | Kevin Wilkie | Initial version based on Matt Swains' Nitro Geo doc |
| 18.2.0.0 | 2020-09-23 | Kevin Wilkie | Style and layout update |
| 18.2.0.1 | 2020-10-15 | Niall McMahon | Style and layout update |

About This Document

This document replaces existing material on the capabilities and sizing of GigaFlow on customer hardware. It describes the hardware configurations and specifications of the equipment that VIAVI uses in its lab environment to validate the performance of the software. Customers can use it as an aid to deliver the required performance characteristics on any alternative vendor hardware selected for their specific implementation.

This document further expands upon those recommendations by describing the dimensioning models used to determine the amount of hardware required to support a given input traffic profile and output feature matrix.

Used in conjunction with the GigaFlow Dimensioning Tool (GigaFlow Dimensioning Tool Spreadsheet) and the Deployments GigaFlow Sizing Document, this document should allow the performance requirements of each component to be understood, modelled for a given customer deployment and feature configuration, and adapted to the selected hardware, so that the required number of servers and the interface and component specifications are correctly identified.

Sizing Tool

Server Sizing

This document aims to provide the engineer with sufficient information to "right-size" an installation. Although GigaFlow will run on almost any hardware that runs Java and PostgreSQL, we recommend investing in a system that exceeds the minimum requirements. This provides not only capacity to scale up the installation but also ensures a good end-user experience, e.g. faster reporting.

When sizing a server, consider the following:

- Ingest Rate: this is the cumulative number of flows per second (flows/s) being sent to the server.

- Device Count: this is the number of devices sending flows to the server.

- Hardware Available: this is the cumulative performance of CPU, RAM, Disk I/O and Disk Space.

- Storage Duration: data will be stored for this period of time.

- Reporting Performance: the queries that the user wants to run and the acceptable response time for requests.

- Features/Functionality: the features that will be enabled and at what scale.

Ingest Rate

This is the number of flows (Netflow/SFlow/JFlow/IPFIX/Syslog) parsed by GigaFlow, and it is the largest scaling factor considered during deployment. The more flows/s that need to be ingested, the more work the server has to do. The more flows/s stored to disk, the longer reports take, because more data has to be accessed.

The flows/s rate can be seen in the GigaFlow UI at System > System Status > Average Flows/s.

As well as the raw flow-rate, the make-up of the flows also has an effect, i.e. if the flows are from an internet-facing device, this can generate a large number of "Device/Source IP/Destination IP" tuples for the software to track. Even on small networks this can increase the RAM assigned to the GigaFlow process.

The number of tracked IP addresses can be seen in the GigaFlow UI at System > System Status > IPs. This shows how many IPs are currently being tracked in RAM. The window size of the IP cache, i.e. how long IPs are held in RAM, is shown at System > System Status > IP Window.

Device Count

The device count is important as internally GigaFlow scales the processing of flows on a per-device basis. Devices are assigned to a CPU thread. The server must be suitably specified and configured to handle the device flows at ingest time without overloading any core.

The number of threads is set at System > Receivers, using the Netflow Process Threads button at the top right of the page. This should never be more than half the number of available cores on the server.
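The half-the-cores rule above can be checked before changing the setting. The following is a minimal sketch, not part of GigaFlow; the function name `max_receiver_threads` is hypothetical, and it assumes that the core count reported by the operating system (which may include hyper-threads) is the figure the rule refers to.

```python
import os


def max_receiver_threads(available_cores: int) -> int:
    """Upper bound on Netflow process threads: at most half the available cores."""
    if available_cores < 1:
        raise ValueError("available_cores must be at least 1")
    # Floor division keeps the result at or below half the cores.
    # A single-core host still needs one thread, so never return less than 1.
    return max(1, available_cores // 2)


if __name__ == "__main__":
    # os.cpu_count() reports logical CPUs; treating these as "available cores"
    # is an assumption of this sketch.
    cores = os.cpu_count() or 1
    print(f"{cores} cores -> at most {max_receiver_threads(cores)} receiver threads")
```

For a 24-core server, this gives an upper bound of 12 threads.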

The table at the bottom of the System > Receivers page shows the number of devices, the type of data and statistics for each CPU thread.

The device count is also important at report time as all tables are keyed by device. Including a device filter when running reports can give significant performance improvements.

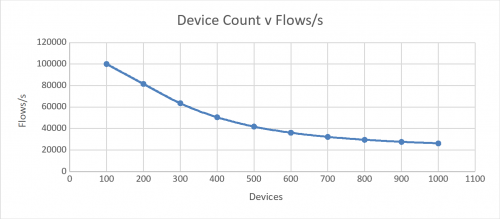

The following chart shows the relationship between device count and supported total number of flows recommended for a single GigaFlow instance.

Hardware Available

To achieve the device/flow rates discussed, the hardware must be at least equivalent to the VIAVI GigaFlow appliance, which has the following specification:

- CPU: 2 x Intel Xeon Silver 4214, 2.2 GHz (12 cores per CPU)

- RAM: 12 x 8 GB = 96 GB

- OS Drive: 2 x 960 GB SSD in RAID1 (804 GB formatted)

- RAID Controller: 9361-4i Single

- DATA Drive RAID: 10 x 8 TB SAS HDD = 80 TB (in RAID6), 58.2 TB after formatting

- GigaFlow appliance estimated IOPS for the data store (OS not included):

  - Random read/write: 2k IOPS @ 4 KB and 64 KB

  - Sequential read/write: 2,500 MB/s (equivalent to 2.5k sequential IOPS @ 1024 KB, 10k sequential IOPS @ 256 KB)

  - Average latency: 4 ms (lower is better, especially important for network storage)

  - GigaFlow typically requires less than 100 MB/s of write throughput; the actual figure depends heavily on the flows/s rate.
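The capacity and sequential IOPS figures in the list above follow from simple arithmetic, which is useful when comparing alternative hardware. The sketch below is illustrative only. It assumes that RAID6 reserves the capacity of two drives for parity, that drive sizes are quoted in decimal terabytes, and that the formatted figure is reported in binary units; that reading reproduces the 58.2 figure, but the source does not state how it was derived. The function names are hypothetical.

```python
def raid6_usable_bytes(drive_count: int, drive_bytes: float) -> float:
    """Usable capacity of a RAID6 array: two drives' worth is reserved for parity."""
    if drive_count < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (drive_count - 2) * drive_bytes


def sequential_iops(throughput_mb_s: float, block_kb: float) -> float:
    """Operations per second needed to sustain a throughput at a given block size."""
    # 1 MB is taken as 1024 KB here.
    return throughput_mb_s * 1024 / block_kb


# Ten 8 TB drives (decimal terabytes, as sold).
usable = raid6_usable_bytes(10, 8e12)
print(f"usable: {usable / 1e12:.0f} TB decimal, {usable / 2**40:.1f} in binary units")

# 2,500 MB/s expressed as IOPS at 1024 KB and 256 KB block sizes.
print(sequential_iops(2500, 1024), sequential_iops(2500, 256))
```

The same two functions can be applied to a candidate array, for example a different drive count or drive size, to compare it against the appliance.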

By default, the server should use 8 cores for PostgreSQL queries, 5 cores for flow receivers and the remainder for the OS, the main Java process and other processing requirements. RAM is split as follows: a quarter for PostgreSQL, a quarter for GigaFlow and a half for OS file caching (PostgreSQL effective cache size settings).
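As a worked example of the default split described above, the following sketch applies it to a given core count and RAM size. It is a planning aid only and does not read or write any GigaFlow or PostgreSQL setting; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ResourcePlan:
    postgres_cores: int
    receiver_cores: int
    other_cores: int  # OS, main Java process and other processing
    postgres_ram_gb: float
    gigaflow_ram_gb: float
    os_cache_ram_gb: float


def default_plan(total_cores: int, total_ram_gb: float) -> ResourcePlan:
    """Apply the default split: 8 cores for PostgreSQL, 5 for receivers, RAM 1/4, 1/4, 1/2."""
    postgres_cores, receiver_cores = 8, 5
    other_cores = total_cores - postgres_cores - receiver_cores
    if other_cores < 1:
        raise ValueError("not enough cores for the default split")
    return ResourcePlan(
        postgres_cores=postgres_cores,
        receiver_cores=receiver_cores,
        other_cores=other_cores,
        postgres_ram_gb=total_ram_gb / 4,
        gigaflow_ram_gb=total_ram_gb / 4,
        os_cache_ram_gb=total_ram_gb / 2,
    )


# Appliance-equivalent server: 2 x 12 cores, 96 GB RAM.
print(default_plan(24, 96))
```

For the appliance specification this leaves 11 cores for the OS and Java process, with 24 GB of RAM each for PostgreSQL and GigaFlow and 48 GB for OS file caching.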

Storage Duration

Storage duration, or how long to keep live data on the disks, has two principal impacts:

- The amount of disk space required should increase linearly with the number of days retention required, if the flow rate is stable.

- The number of tables to be maintained affects housekeeping duties such as rollups. It also affects report creation, as more tables have to be queried or pruned from a query.
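The linear relationship between retention and disk space can be sketched as follows. The stored size of a flow record is not given in this document, so `bytes_per_flow` is a hypothetical parameter, and the value of 100 bytes used below is a placeholder chosen only for illustration; measure the real figure on an existing deployment before relying on the result.

```python
SECONDS_PER_DAY = 86_400


def storage_bytes(flows_per_second: float, retention_days: float, bytes_per_flow: float) -> float:
    """Disk space needed for a stable flow rate; grows linearly with retention."""
    return flows_per_second * SECONDS_PER_DAY * retention_days * bytes_per_flow


def max_retention_days(flows_per_second: float, disk_bytes: float, bytes_per_flow: float) -> float:
    """Retention that fits on a disk of the given size at a stable flow rate."""
    return disk_bytes / (flows_per_second * SECONDS_PER_DAY * bytes_per_flow)


# Placeholder inputs: 10,000 flows/s, 30 days, 100 bytes per stored flow (assumed).
needed = storage_bytes(10_000, 30, 100)
print(f"{needed / 1e12:.3f} TB for 30 days")
print(f"{max_retention_days(10_000, 64e12, 100):.0f} days fit on 64 TB")
```

Doubling the retention period doubles the result, which is the linear behaviour described in the first point above.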

By default, GigaFlow keeps data based on device-specific rollup schedules. For high-flow devices, a table is created every hour. For smaller devices, each table may cover up to 4 hours.

When data is older than the Forensics Rollup Age period (4 days by default), the low-flow device tables are grouped into single 24-hour tables; this helps to keep the total table count manageable.

It is recommended that the table count be kept below 15,000 tables so that query planning does not become excessive. At 60,000 tables, reporting will fail and the retention period should be reduced. The expected table count based on the current device/flow rates can be found using the GigaFlow UI at System > Global > Storage > Max Forensics Storage.
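For a rough estimate before deployment, the table count can be approximated from the rollup behaviour described above. The model below is a simplification and not GigaFlow's own calculation: it assumes one table per device per period, that high-flow devices keep hourly tables for the full retention period, and that low-flow devices use 4-hour tables until the rollup age and 24-hour tables afterwards. The function names are hypothetical; the value shown in the GigaFlow UI remains the authoritative figure.

```python
RECOMMENDED_MAX_TABLES = 15_000  # keep the count below this
FAILURE_TABLES = 60_000          # reporting fails at this count


def estimate_table_count(high_flow_devices: int, low_flow_devices: int,
                         retention_days: int, rollup_age_days: int = 4) -> int:
    """Approximate table count under the simplified model described in the lead-in."""
    recent_days = min(retention_days, rollup_age_days)
    older_days = max(0, retention_days - rollup_age_days)
    # Assumption: hourly tables are kept for the whole retention period.
    high = high_flow_devices * 24 * retention_days
    # Assumption: six 4-hour tables per day, then one 24-hour table per day.
    low = low_flow_devices * (6 * recent_days + older_days)
    return high + low


def classify(table_count: int) -> str:
    if table_count >= FAILURE_TABLES:
        return "reduce retention"
    if table_count >= RECOMMENDED_MAX_TABLES:
        return "above recommended"
    return "ok"


count = estimate_table_count(high_flow_devices=10, low_flow_devices=200, retention_days=30)
print(count, classify(count))
```

With 10 high-flow devices, 200 low-flow devices and 30 days of retention, this model gives 17,200 tables, which is above the recommended ceiling but well short of the point at which reporting fails.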

Reporting Performance

This is impacted by the following factors:

- The filters applied.

- The volume of data to process.

- The number of returned results.

- The hardware available to deliver the report.

All reporting in GigaFlow is handled by the PostgreSQL database. For this reason, we give most of the hardware resources to it, so that reporting time is as short as possible and the user experience is good. However, there will always be a trade-off between query performance, data volume and hardware costs.

Features/Functionality

GigaFlow has a large number of configurable features and functions. The overall system performance depends on the configuration of these features and functions and the available hardware resources.

For this reason, it is difficult to predict the performance of a given server. Additionally, the flow profile will differ from server to server. However, we do know the hardware resources required for the different features and functions. The following table shows the top-line functions in GigaFlow, how each is affected by hardware resources, which factor(s) to be aware of and any recommended limits, whether enforced or unenforced.

| Function | CPU | Memory | Disk | Variable Load Factors Outside of Device and Flow Count | Recommended Limit (May require more RAM) |
|---|---|---|---|---|---|
| Application Identification | Medium | Low | None | Number of additional applications defined (Complex or otherwise) | 50 |
| Deduplication | Medium | Low | None | Nothing configurable | N/A |
| IP Discovery | High | High | None | Number of unique IP addresses * how many devices they have been seen on | 1,000,000 per hour |
| Traffic Groups | Low | Low | None | Number of Traffic Groups | 2,000 |
| First Packet Response | Medium | Low | None | Number of monitored subnets and services monitored | 10 subnets, 100 services per subnet |
| Watch-listing | High | Low | None | Number of denylists (blacklists) to monitor for | 30,000 |
| Server Discovery | High | High | None | Devices and servers to monitor | N/A |
| Summary Builder | High | Low | Low | Number of device interfaces | 5,000 |
| PTC Flow Creation | High | High | None | Number of (client, server, appId, device) tuples | 1/4 normal flow capacity |
| Syslog Flow Creation | High | High | None | Number of (client, server, appId, device) tuples | 1/4 normal flow capacity |
| Apex Flow Creation | High | High | High | Number of (client, server, appId, device) tuples | normal flow capacity |
| Performance Overviews | High | Low | Low | Nothing configurable | N/A |
| Performance Traffic Groups | High | Low | Low | Number of Traffic Groups | 2,000 |
| Syn Monitoring | High | Low | None | Nothing configurable | N/A |
| Profiling | High | Low | None | Number and complexity of profiles | 100 |
| Flow Storage | Low | Medium | High | Nothing configurable | See Chart |
| User Mapping | High | Low | None | Number of monitored users | 10,000 |
| Syslog Parsing | High | None | None | Parser complexity and string length | Parser will inform user during configuration |
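When planning a deployment, the single-number limits in the table can be compared against the intended configuration. The sketch below is a planning aid only and not a GigaFlow API; the dictionary keys reuse the function names from the table, and the `planned` values are hypothetical inputs supplied by the engineer. Limits that are not a single number (for example, First Packet Response or IP Discovery) are left out.

```python
# Recommended limits taken from the table above (numeric entries only).
RECOMMENDED_LIMITS = {
    "Application Identification": 50,
    "Traffic Groups": 2_000,
    "Watch-listing": 30_000,
    "Summary Builder": 5_000,
    "Performance Traffic Groups": 2_000,
    "Profiling": 100,
    "User Mapping": 10_000,
}


def over_limit(planned: dict) -> dict:
    """Return {function: (planned value, recommended limit)} for each exceeded limit."""
    return {
        name: (value, RECOMMENDED_LIMITS[name])
        for name, value in planned.items()
        if name in RECOMMENDED_LIMITS and value > RECOMMENDED_LIMITS[name]
    }


# Hypothetical planned configuration.
planned = {"Traffic Groups": 2_500, "Profiling": 40, "User Mapping": 10_000}
for name, (value, limit) in over_limit(planned).items():
    print(f"{name}: planned {value} exceeds recommended limit {limit}")
```

A value equal to the limit is treated as acceptable here. Exceeding a limit does not necessarily mean the feature will fail; as the column heading notes, it may require more RAM.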