Create script for AWS Lambda to migrate old VPC flow logs

| Line 12: | Line 12: | ||

* Change the '''Lambda''' execution policies. | * Change the '''Lambda''' execution policies. | ||

You will also need to create an S3 bucket to receive the archived flow logs. | You will also need to create an S3 bucket to receive the archived flow logs. | ||

| + | |||

= Create the AWS '''Lambda''' function = | = Create the AWS '''Lambda''' function = | ||

| + | |||

'''1.''' Login to the AWS portal. | '''1.''' Login to the AWS portal. | ||

| + | |||

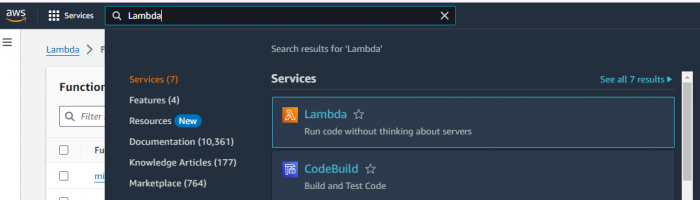

'''2.''' Search for the '''Lambda''' service and click the related result. | '''2.''' Search for the '''Lambda''' service and click the related result. | ||

<br />[[File:search_lambda.png|700px]] | <br />[[File:search_lambda.png|700px]] | ||

Revision as of 18:49, 17 April 2024


This procedure uses a JavaScript script and the AWS Lambda service to automatically migrate data from your live bucket (the one that collects your VPC flow logs) to an archive bucket (the one that receives the old flow log files from the script).

This script lets you move or delete old VPC flow logs as required by your organization. To do this, you will create an AWS Lambda function that uses the Node.js 16 runtime.

You will then set this script to run every hour using the instructions below. If you have more than 1000 new flow logs per hour, you may want to run the script more often, as only 1000 files are processed in each run.
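The per-run limit can be illustrated with a minimal sketch. It is not the script used by this procedure: the function names, the 30-day age threshold, and the oldest-first ordering are assumptions made for illustration. The input objects use the `Key` and `LastModified` fields that an S3 object listing returns.

```javascript
// Illustrative sketch only; not the script from this procedure.
// Per-run cap described above.
const MAX_FILES_PER_RUN = 1000;

// Hypothetical helper: picks the keys of flow log files older than
// maxAgeDays (an assumed threshold), oldest first, capped at `limit`.
function selectLogsToArchive(objects, now, maxAgeDays = 30, limit = MAX_FILES_PER_RUN) {
  const cutoff = now.getTime() - maxAgeDays * 24 * 60 * 60 * 1000;
  return objects
    .filter((obj) => obj.LastModified.getTime() < cutoff)
    .sort((a, b) => a.LastModified.getTime() - b.LastModified.getTime())
    .slice(0, limit)
    .map((obj) => obj.Key);
}

// Hypothetical helper: number of runs needed to work through a backlog.
function runsNeeded(backlog, limit = MAX_FILES_PER_RUN) {
  return Math.ceil(backlog / limit);
}
```

For example, with the cap of 1000 files, `runsNeeded(2500)` gives 3 runs. If new logs arrive faster than 1000 per hour, an hourly schedule never catches up, which is why a higher execution rate is suggested.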

Before you proceed, make sure that you have the correct AWS permissions to perform the following actions:

- Create Lambda functions.

- Access S3 buckets.

- Change the Lambda execution policies.

You will also need to create an S3 bucket to receive the archived flow logs.
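As a rough illustration of what the Lambda execution policy may need to allow, the sketch below builds an IAM policy document for moving objects between two buckets. The exact policy used by this procedure is not shown in this section, so the function name, the bucket names, and the choice of actions are assumptions: listing, reading, and deleting on the live bucket, and writing on the archive bucket.

```javascript
// Illustrative sketch only; adjust to your organization's requirements.
// buildArchivePolicy is a hypothetical helper, not part of any AWS SDK.
function buildArchivePolicy(liveBucket, archiveBucket) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        // Listing applies to the bucket itself.
        Effect: "Allow",
        Action: ["s3:ListBucket"],
        Resource: [`arn:aws:s3:::${liveBucket}`],
      },
      {
        // Reading and deleting apply to the objects in the live bucket.
        Effect: "Allow",
        Action: ["s3:GetObject", "s3:DeleteObject"],
        Resource: [`arn:aws:s3:::${liveBucket}/*`],
      },
      {
        // Writing applies to the objects in the archive bucket.
        Effect: "Allow",
        Action: ["s3:PutObject"],
        Resource: [`arn:aws:s3:::${archiveBucket}/*`],
      },
    ],
  };
}

// Example with placeholder bucket names.
const policyJson = JSON.stringify(
  buildArchivePolicy("my-live-flow-logs", "my-archived-flow-logs"),
  null,
  2
);
```

The resulting `policyJson` string is in the JSON format that the IAM policy editor accepts. If you only delete old logs and never move them, the archive bucket statement would not be needed.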

Create the AWS Lambda function

1. Log in to the AWS portal.

2. Search for the Lambda service and click the related result.

3.