Observer GigaFlow Documentation

Table of Contents

- Legal

- Glossary

- Introduction

- About GigaFlow

- How-To Guide for GigaFlow

- First Steps

- PostgreSQL 16.1 upgrade (for GigaFlow version 18.19.0.0 thru 18.21.X.X)

- Managed PostgreSQL 16.1 upgrade (for GigaFlow version 18.22.2.0 and newer)

- Conducting Searches in GigaFlow

- Diagnostics and Reporting

- Explore Bandwidth Usage by Interface and Application

- Identify Bandwidth Usage by User

- Use First Packet Response to Understand Application Behaviour

- Determine if Bad Traffic is Affecting Your Network

- Create a Script

- Create a Site

- Create and Use a Profile

- Create an Integration

- Extract a GigaStor trace file

- Respond to a Blacklist Email Alert

- Configuration and System Set-up

- FAQs and Troubleshooting

- Reference Manual for GigaFlow

- GigaFlow Search

- Dashboards

- Enterprise Dashboard

- Server Overview

- Device Overview

- Interface Overview

- Interface Details

- Attributes and Tools

- Summary Interface Total Traffic

- Summary Interface In

- Summary Interface Out

- Summary Interface In Packets

- Summary Interface Out Packets

- Summary Interface In Flows

- Summary Interface Out Flows

- Summary Interface DSCP In Ingress Bytes

- Summary Interface DSCP Out Ingress Bytes

- Summary Interface DSCP In Egress Bytes

- Summary Interface DSCP Out Egress Bytes

- Application Overview

- Source IP Overview

- Destination IP Overview

- Sites Overview

- Top Sites Sources (Last Hour v Last Week Hour)

- Top Sites Destinations (Last Hour v Last Week Hour)

- Top Sites Sources (Last Hour v Week Last Week Hour)

- Top Sites Destinations (Last Hour v Week Last Week Hour)

- Summary Source Sites

- Summary Destination Sites

- Events

- My Current Queries

- My Complete Queries

- All Complete Queries

- All Current Forensics

- Canceled Queries

- Cluster Search

- DB Queries

- Forensics

- First Packet Response

- Ip Viewer

- NAT Reporting

- Network Audits

- Saved Reports

- Server Discovery

- Server Discovery/Detailed Information

- System Wide Reports

- SQL Reports

- Trace Extraction Jobs

- User Events

- Applications

- Existing Defined Service

- Existing Defined Application

- Existing Flow Objects

- Existing Protocol/Port Applications

- Attributes

- Cloud Services

- GEOIP

- Infrastructure Devices

- Detailed Device Information

- SNMP Settings

- SNMP Information Gathered

- Device SNMP Issues

- Attributes and Tools

- Storage Setting

- Other Settings

- Profiling

- Reporting

- New Report Link

- Import Report Link

- General

- Forensics Reports

- Existing DSCP Names

- New DSCP Name

- Existing Report Links

- Server Subnets

- Sites

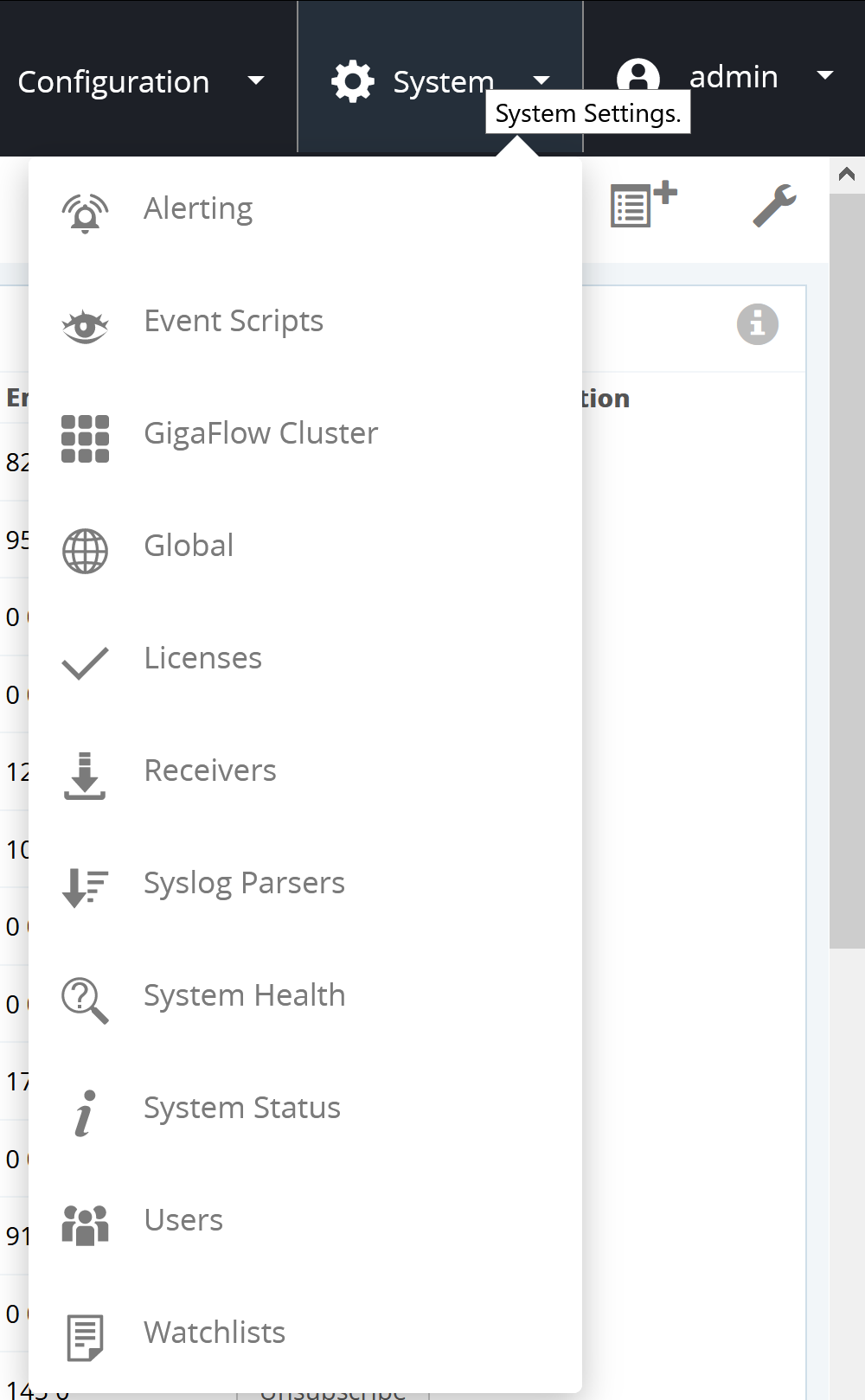

- Alerting

- Event Scripts

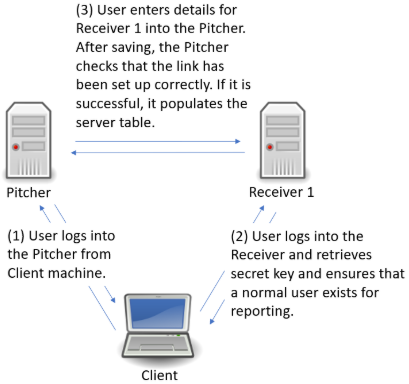

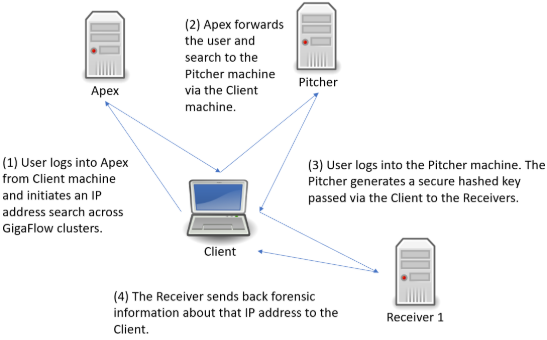

- GigaFlow Cluster

- This Server

- New Cluster Server

- Cluster Access

- Search the GigaFlow Cluster

- Encryption and GigaFlow Clusters

- Global

- General

- LDAP

- SSL

- Remote Services

- SNMP V2 Settings

- SNMP V3 Settings

- Log Setting

- Proxy Setting

- MAC Vendors

- Mail Settings

- Storage

- Data Retention and Rollup

- Integrations

- GigaStor

- Import/Export

- Licenses

- GigaFlow License

- 3rd Party Licenses

- Cleartext Communication

- Call Home Send

- Call Home Response

- Call Home Errors

- License

- Receivers

- Syslog Parsers

- System Health

- System Status

- Users

- Existing Users

- Add Local User

- Add User Group

- Existing User Groups

- Existing LDAP Groups

- Add LDAP User

- Add LDAP Group

- Existing LDAP Nested Groups

- Existing Portal Users

- Add Portal User

- Existing Data Access Groups

- Add Data Access Group

- Watchlists

- Forensic Report Types

- Application

- ASs by Dst

- ASs by Source

- ASs Pairs

- Address Pairs

- Addresses As Sources And Dest Port By Dest Count (Edit?)

- Addresses By Dest

- Addresses By Source

- All Fields

- All Fields Avg FPR

- All Fields Max FPR

- Application Flows

- Application Flows With User

- Applications With Flow Count

- Class Of Service

- Duration Avg

- Duration Max

- FW Event

- FW Ext Code

- Interface Pairs

- Interface By Dest

- Interfaces By Destination Pct

- Interfaces By Source

- Interfaces By Source Pct

- MAC Address Pairs

- MAC Addresses By Dest

- MAC Addresses By Source

- Ports As Dest By Dest Add Popularity

- Ports As Dest By Src Add Popularity

- Ports As Src By Dest Add Popularity

- Ports As Src By Src Add Popularity

- Ports By Dest

- Ports By Source

- Posture

- Protocols

- Servers As Dest With Ports

- Servers As Dst Address

- Servers AS Src Address

- Servers As Src With Ports

- Sessions

- Sessions Flows

- Sessions With Ints

- Subnet Class A By Dest

- Subnet Class A By Source

- Subnet Class B By Dest

- Subnet Class B By Source

- Subnet Class C By Dest

- Subnet Class C By Source

- Subnet Class C Destination By Dest IP Count

- Subnet Class C Source By Source IP Count

- TCP Flags

- Site By Dest

- Site By Source

- Site Pairs

- URLs

- USers (Report)

- TCP Flags

Observer GigaFlow Documentation

Documentation version 18.24.4.0 was released May 09, 2025.

Legal

Notice

Every effort was made to ensure that the information in this manual was accurate at the time of printing. However, information is subject to change without notice, and VIAVI Solutions reserves the right to provide an addendum to this manual with information not available at the time that this manual was created.

Copyright

© Copyright 2025 VIAVI Solutions. All rights reserved. VIAVI and the VIAVI logo are trademarks of VIAVI Solutions Inc. ("VIAVI"). All other trademarks and registered trademarks are the property of their respective owners. No part of this guide may be reproduced or transmitted, electronically or otherwise, without the written permission of the publisher.

Terms and Conditions

Specifications, terms, and conditions are subject to change without notice. The provision of hardware, services, and/or software are subject to VIAVI standard terms and conditions, available at www.viavisolutions.com/terms. Specifications, terms, and conditions are subject to change without notice. All trademarks and registered trademarks are the property of their respective companies.

Glossary

- AES: the Advanced Encryption Standard, also known by its original name Rijndael, is a specification for the encryption of electronic data established by the U.S. National Institute of Standards and Technology (NIST) in 2001. See Advanced Encryption Standard at Wikipedia for more.

- (An) Application: is an abstract entity within the Application Layer that specifies the shared communications protocols and interface methods used by a particular Flow Object on your network.

- Application Layer: An application layer is an abstraction layer that specifies the shared communications protocols and interface methods used by hosts in a communications network. The application layer abstraction is used in both of the standard models of computer networking: the Internet Protocol Suite (TCP/IP) and the OSI model. Although both models use the same term for their respective highest level layer, the detailed definitions and purposes are different. See application layer at Wikipedia for more.

- Appid: the application ID is a unique identifier for each application. In GigaFlow, Appid is a positive or negative integer value. The way in which the Appid is generated depends on which of the 3 ways the application is defined within the system. Following the hierarchy outlined in Configuration > Profiling -- Apps/Options -- Defined Applications, a negative unique integer value is assigned if (1) the application is associated with a Profile Object or (2) if it is named in the system. If the application is given by its port number only (3), a unique positive integer value is generated that is a function of the lowest port number and the IP protocol.

- ARP: the Address Resolution Protocol is a protocol used by the Internet Protocol (IP) to map IP network addresses to the hardware (MAC) addresses used by a data link protocol. Although a Layer 2 protocol, ARP is used by routers in Layer-3 as opposed to Layer-2 switching, where CAM tables are used. See Address Resolution Protocol.

- Alert: is a GigaFlow record that is created when a monitored flow pattern matches certain criteria. Alerts are usually triggered by unwanted behaviour on the nework. See System > Alerting.

- Allowed: in GigaFlow, an allowed flow pattern is one that will not cause an exception, i.e. trigger an alert. This is used in in GigaFlow's Profiler. See Configuration > Profiling for more.

- Base DN: or Base Distinguished Name. In the LDAP directory, each object has a unique path to its place in the directory. This path is the DN. The base DN is the start part of this LDAP path. See Lightweight Directory Access Protocol at Wikipedia for more.

- Blacklist: this is a list of known malevolent or likely malevolent IP addresses, compiled from online threat lists.

- CAM: a content-addressable memory (CAM) table is the usual implementation of a MAC table, more correctly called a forwarding information base (FIB). A CAM table is a dynamic list of MAC addresses and their corresponding ports. The CAM table is used to enable Layer-2 switching, i.e. direct connections between machines within a LAN at the Layer-2 level. The CAM table is closely associated with the ARP table. See forwarding information base at Wikipedia for more.

- Class of Service (COS): Class of service is a parameter used in data and voice protocols to differentiate the types of payloads contained in the packet being transmitted. See class of service at Wikipedia for more.

- Client: a client is a piece of computer hardware or software that accesses a service made available by a server, in this case GigaFlow. See client (computing) at Wikipedia for more.

- Context (SNMP): SNMP contexts provide VPN users with a secure way of accessing MIB (management information base) data. See Cisco for more.

- Deduplication: In computing, data deduplication is a specialized data compression technique for eliminating duplicate copies of repeating data. See data deduplication at Wikipedia for more.

- Destination IP Address: the destination IP address is the intended receiver of information in a computer-to-computer communication.

- Device: see Infrastructure Device.

- Domain Controller: On Microsoft Servers, a domain controller (DC) is a server computer that responds to security authentication requests (logging in, checking permissions, etc.) within a Windows domain. See domain controller at Wikipedia for more.

- DSCP: or Type of Service. "The type of service (ToS) field in the IPv4 header has had various purposes over the years, and has been defined in different ways by five RFCs. The modern redefinition of the ToS field is a 8-bit differentiated services field (DS field) which consists of a 6-bit Differentiated Services Code Point (DSCP) field and a 2-bit Explicit Congestion Notification (ECN) field". See type of service at Wikipedia for more.

- DNS: the Domain Name System is the naming system used by all devices connected to the Internet. See Domain Name System at Wikipedia for more.

- Entry: an entry flow pattern in GigaFlow is a flow pattern used to define a Profile to be tested against an Allowed profile. This concept is used in GigaFlow's Profiler tool. See Configuration > Profiling for more.

- Exceptions: this is the flow records that have not matched the Allowed Flow record template.

- (GigaFlow) Event: is a GigaFlow record that is created when a monitored flow pattern matches certain criteria. Some things that will trigger an event record include:

- Attempts to access blacklisted resources.

- Profile exceptions, i.e. behaviours deviating from norms.

- A SYN flood event.

- A lost neighbour.

- A new connected device sending flows.

- A connected device that stops sending flows.

See Dashboards > Events for more.

- Flow: a flow is a unique stream of packets in one direction. Cisco standard NetFlow version 5 defines a flow as a unidirectional sequence of packets that all share the following 7 values:

- Ingress interface (SNMP ifIndex).

- Source IP address.

- Destination IP address.

- IP protocol.

- Source port for UDP or TCP, 0 for other protocols.

- Destination port for UDP or TCP, type and code for ICMP, or 0 for other protocols.

- IP Type of Service.

(Taken from Wikipedia.)

GigaFlow supports NSEL, sFlow v5, jFlow, IPFIX, Netflow v5 and Netflow v9.

- Flow Object: this is the abstract grouping of a series of flows that can be called by the profiler. Flow Objects are defined in the Profiler. See Configuration > Profiling for more.

- Flow Posture: if a flow creates a black-list or profile event, an event or alert flow record is created.

- Flow Record Fields in GigaFlow with Java types:

- customerid integer: stores the site source identifier.

- device numeric(39,0): stores the numeric IPV6 address of the device sending us the flow/syslog records.

- engineid integer: stores the site destination identifier.

- srcadd numeric(39,0): stores the numeric IPV6 address of the source for the traffic in this record.

- dstadd numeric(39,0): stores the numeric IPV6 address of the destination for the traffic in this record.

- nexthop numeric(39,0): stores the numeric IPV6 address of the nexthop for the traffic in this record.

- inif integer: SNMP ifIndex of the input interface that seen the traffic for this flow.

- outif integer: SNMP ifIndex of the output interface that seen the traffic for this flow.

- pkts bigint: number of packets transmitted in this flow.

- bytes bigint: number of octets/bytes transmitted in this flow.

- firstseen bigint: millisecond timestamp of when this flow started.

- duration bigint: millisecond duration of this flow.

- srcport integer: source port number for traffic in this flow record.

- dstport integer: destination port number for traffic in this flow record.

- flags integer: TCP flags as an integer value.

- proto integer: IP Protocol number for this flow record.

- tos integer: IP TOS/COS value for this flow record.

- appid integer: assigned application ID; the lowest of src/dst port number.

- srcas integer: source AS number used for this flow.

- dstas integer: destination AS number used for this flow.

- userid text COLLATE pg_catalog."default": user ID for this flow, may be as sent or inferred from other sources.

- userdomain text COLLATE pg_catalog."default": user domain for this flow, may be as sent or inferred from other sources.

- srcmac bigint: source MAC address (Java long value), either as supplied or inferred from other sources.

- dstmac bigint: destination MAC address (Java long value), either as supplied or inferred from other sources.

- postureid integer: marking to indicate this flow is of interest (due to blacklist or profiling problems).

- spare integer: used to store the first packet response value. -1 = unset, -2 = no response in scope.

- url text COLLATE pg_catalog."default": free text field; used for application names or URL data.

- fwextcode integer: additional field used to identify traffic (from Cisco NSEL).

- fwevent integer: additional field used to identify events (from Cisco NSEL).

- FlowSec: see Secflow.

- Hash function: a hash function is any function that can be used to map data of arbitrary size to data of a fixed size. Hash functions are used to uniquely identify secret information, for example user login data. See hash function at Wikipedia for more.

- Hits: in GigaFlow, this is the number of flow records that have matched a particular filter, rule or Allowed profile.

- (SNMP) IfIndex: From Cisco - "One of the most commonly used identifiers in SNMP-based network management applications is the Interface Index (ifIndex) value. IfIndex is a unique identifying number associated with a physical or logical interface. For most software, the ifIndex is the name of the interface".

- Infrastructure Device: is any computing machine connected to your network.

- IP Address: an Internet Protocol address (IP address) is a numerical label assigned to each device connected to a computer network that uses the Internet Protocol for communication. An IP address serves two principal functions: host or network interface identification and location addressing. See IP address at Wikipedia for more.

- LAN: A local area network (LAN) is a computer network that interconnects computers within a limited area such as a residence, school, laboratory, university campus or office building. See local area networkat Wikipedia.

- Layer-2 (L2): in the seven-layer OSI model of computer networking, the data link layer is Layer-2. This layer is the protocol layer that transfers data between adjacent network nodes in a wide area network (WAN) or between nodes on the same local area network (LAN) segment. See network layer at Wikipedia for more.

- Layer-3 (L3): in the seven-layer OSI model of computer networking, the network layer is Layer-3. The network layer is responsible for packet forwarding including routing through intermediate routers. The TCP/IP Internet Layer is a subset of the OSI Network Layer. See network layer at Wikipedia for more.

- LDAP: the Lightweight Directory Access Protocol is an open, vendor-neutral, industry standard application protocol for accessing and maintaining distributed directory information services over an Internet Protocol (IP) network. A common use of LDAP is to provide a central place to store usernames and passwords. This allows many different applications and services to connect to the LDAP server to validate users. See Lightweight Directory Access Protocol Wikipedia for more.

- Lost Neighbour: a monitored IP address that disappears.

- MAC Address: the media access control address of a device is a unique identifier assigned to a network interface controller (NIC) for communications at the data link layer of a network segment. See MAC address> at Wikipedia for more.

- MD5: or Message-Digest-5 algorithm is a hash function designed by Ronald Rivest at MIT. See hash function.

- Nashorn: is a JavaScript engine developed in the Java programming language by Oracle. GigaFlow uses Nashorn to power its JS functionality. See Nashorn (JavaScript engine) at Wikipedia and Oracle Nashorn at Oracle for more.

- Network Address Translation (NAT): is a method of remapping one IP address space into another by modifying network address information in the IP header of packets while they are in transit across a traffic routing device. See Network address translation at Wikipedia for more.

- NetFlow: is a feature that was introduced on Cisco routers around 1996 that provides the ability to collect IP network traffic as it enters or exits an interface. GigaFlow supports NSEL, sFlow v5, jFlow, IPFIX, Netflow v5 and Netflow v9. See also Flow. See NetFlow at Wikipedia for more.

- Poller: A Poller is a collection of devices and template management information base (MIB) variables configured in the LAN management solution (LMS) to monitor the utilization and availability levels of devices that are connected to the network. (Cisco).

- PostgreSQL: GigaFlow uses PostgreSQL for its databasing. See PostgreSQL for more.

- Profile Monitoring: GigaFlow actively monitors flows on your network and matches patterns against flow Profiles created using the Profiler tool. See Configuration > Profiling for more.

- Profiler: GigaFlow allows the creation of an abstract profile. A profile is a flow object that is itself a combination of flow objects. A profile defines an acceptable flow pattern for your network. This concept is used in GigaFlow's Profiler tool. See Configuration > Profiling for more.

- Router: a router is a networking device that forwards data packets between computer networks. A router is primarily a Layer-3 device. See router (computing) at Wikipedia for more.

- Sample Rate (Netflow): some devices, especially older ones, can send sFlow and jFlow in a sampled format. GigaFlow supports sampled data, re-sampling to the correct rate. By default, if the sample rate is contained in the flow record, GigaFlow will use this to resample. If the sample rate is not contained in the flow record, it can be set manually for the device.

To manually set the sample rate for a device, go to Configuration > Infrastructure Devices > Detailed Device Information and Storage Settings.

- Secflow records: a portmanteau of "security" and "flow". Used to denote flow records that indicate a GigaFlow security event.

- SHA: or Secure Hash Algorithm, developed by the NSA. See hash function.

- SIEM: In the field of computer security, security information and event management (SIEM) software products and services combine security information management (SIM) and security event management (SEM). They provide real-time analysis of security alerts generated by applications and network hardware. See Security information and event management at Wikipedia.

- SNMP: Simple Network Management Protocol (SNMP) is an Internet Standard protocol for collecting and organizing information about managed devices on IP networks and for modifying that information to change device behavior. Devices that typically support SNMP include cable modems, routers, switches, servers, workstations, printers, and more. See Simple Network Management Protocol

- Source IP Address: the source IP address is the sender of information in a computer-to-computer communication.

- SQL: Structured Query Language, used to interact with the PostgreSQL database. You can find a complete SQL manual at the PostgreSQL website.

- SYN: to establish a connection, TCP uses a three-way handshake. Before a client attempts to connect with a server, the server must first bind to and listen at a port to open it up for connections: this is called a passive open. Once the passive open is established, a client may initiate an active open. To establish a connection, the three-way (or 3-step) handshake occurs:

- SYN: The active open is performed by the client sending a SYN to the server. The client sets the segment's sequence number to a random value A.

- SYN-ACK: In response, the server replies with a SYN-ACK. The acknowledgment number is set to one more than the received sequence number i.e. A+1, and the sequence number that the server chooses for the packet is another random number, B.

- ACK: Finally, the client sends an ACK back to the server. The sequence number is set to the received acknowledgement value i.e. A+1, and the acknowledgement number is set to one more than the received sequence number i.e. B+1.

At this point, both the client and server have received an acknowledgment of the connection. The steps 1, 2 establish the connection parameter (sequence number) for one direction and it is acknowledged. The steps 2, 3 establish the connection parameter (sequence number) for the other direction and it is acknowledged. With these, a full-duplex communication is established. See Transmission Control Protocol at Wikipedia.

- SYN Flood: a SYN flood is a form of denial-of-service attack in which an attacker sends a succession of SYN requests to a target's system in an attempt to consume enough server resources to make the system unresponsive to legitimate traffic. See SYN flood at Wikipedia.

- Syslog: is a standard for message logging. It allows separation of the software that generates messages, the system that stores them, and the software that reports and analyzes them. Each message is labeled with a facility code, indicating the software type generating the message, and assigned a severity level. See syslog at Wikipedia.

- User: depends on context. The User can be a person authorized to log into your GigaFlow system. See System > Users for more. User can also refer to people authorized to access devices on your network.

- VLAN: or Virtual LAN. "A VLAN is a group of devices on one or more LANs that are configured to communicate as if they were attached to the same wire, when in fact they are located on a number of different LAN segments ... VLANs define broadcast domains in a Layer 2 network". See Understanding and Configuring VLANs at Cisco and Virtual LAN at Wikipedia.

- Watchlists: refers to both blacklists and the opposite, whitelists.

Introduction

This Document

This document consists of two main parts: (i) the Observer GigaFlow How-To Guide and (ii) the Reference Manual for the user interface.

How-To Guide

The How-To Guide contains useful work-throughs for many common GigaFlow tasks.

Reference Manual

This is the official reference manual for the GigaFlow UI, included with every distribution. The Reference Manual provides detailed explanations for each GigaFlow function acccessible through the user interface.

GigaFlow Wiki

The GigaFlow Wiki contains additional detailed material including useful scripts, detailed installation instructions and frequently updated notes. Check here if you have not found what you are looking for in the How-To Guide or the Reference Manual.

About GigaFlow

GigaFlow is Viavi's Netflow collection and monitoring platform. GigaFlow helps you regain control of your IT network, giving you network-based security and application assurance.

From a performance perspective, GigaFlow allows you to:

- Troubleshoot your network using First Packet Response, GigaFlow's network response time tool.

- Audit network applications and infrastucture devices.

- Ensure that application performance is in line with Service level agreements (SLAs).

- Easily monitor network traffic and understand utilization by user and application, i.e. determine where your network traffic is going and who, or what, is using up bandwidth on expensive links.

- Report and filter every session by multiple parameters.

- Integrate with multiple-session reporting technologies.

From a security perspective, GigaFlow allows you to:

- Monitor URLs and network connections.

- Prevent connections to known bad IP addresses.

- Allow known IP addresses.

- Prevent visibility blindspots.

- View logged-in users.

- Ensure that users do not access files or servers outside of set permission levels.

- View connected USB devices.

- Monitor network traffic for unusual patterns using flow profiles that you define, with alerts when exceptions occur, e.g. if a user logs into a machine they have never used before or if traffic is recorded from a never-before-seen machine.

- Identify points of incursion or exfiltration on your network.

- Identify the end users or devices associated with an event.

GigaFlow's benefits include:

- Complete stitched IT forensic evidence.

- No additional network hardware; device management is non-intrusive.

- Threat identification is "always-on".

GigaFlow's core components are:

- Front End

- Apache Velocity Templating Engine: used for all front end page layout.

- JavaScript: provides automatic updating of`the user interface (UI) and polling for new data. Integrates more than 25 open source projects.

- Bootstrap: provides UI components.

- Theme: look and feel provided by out development team's experience.

-

Back End

- Eclipse-Temurin Java 17 (OpenJDK): supported and installed by GigaFlow versions 18.16.0.0 and newer.

- PostgreSQL Database 11 - for versions 18.16.0.0 to 18.18.0.0: required to support partitioning.

- PostgreSQL Database 16.1 - for versions 18.19.0.0 and newer: required to support partitioning.

- JavaScript Engine (Nashorn): required for scripting engine.

GigaFlow supports NSEL, sFlow v5, jFlow, IPFIX, Netflow v5 and Netflow v9.

How-To Guide for GigaFlow

First Steps

Install GigaFlow on a Windows Platform

GigaFlow requires Eclipse-Temurin Java 17 - OpenJDK and PostgreSQL Database 11 (for versions 18.16.0.0 to 18.18.0.0).

GigaFlow requires Eclipse-Temurin Java 17 - OpenJDK and PostgreSQL Database 16.1 (for versions 18.19.0.0 and newer).

The recommended platform for GigaFlow is Windows Server 2016, English Edition. Installation must be performed using a local administrator account, not a domain administrator account.

GigaFlow will install:

- Eclipse-Temurin Java 17 (OpenJDK) - for versions 18.16.0.0 and newer

- PostgreSQL Database 11 - for versions 18.16.0.0 to 18.18.0.0

- PostgreSQL Database 16.1 - for versions 18.19.0.0 and newer

- The Gigaflow Service.

NOTE: Due to changes in Oracle licensing, VIAVI has replaced Oracle Java in GigaFlow v18.16.0.0. Please note that while this new GigaFlow release no longer uses Oracle Java, the installation procedure will not remove the Oracle Java runtime software since other applications may still depend upon it. If you wish to uninstall Oracle Java, please refer to the instructions found in Removing Oracle Java.

Legacy GigaFlow versions 18.15.0.0 or older require the use of Oracle Java version 1.8_202 JRE (Java Runtime Environment), which may require a payment of additional license fees to Oracle. If you continue to use these legacy GigaFlow versions, please check directly with Oracle regarding licensing requirements/fees for Oracle Java.

When complete, a new service called GigaFlow will be running and enabled when the server starts up. On running the GigaFlow installer, the installation proceeds as follows:

1. This is the GigaFlow Setup welcome page:

Click Next to continue.

2. On the next page, you can choose whether or not to install start menu shortcuts:

Click Next to continue.

3. The next page displays the GigaFlow End User License Agreement (EULA); you must agree to the terms of the EULA to continue the installation or to use the product.

Click I Agree to continue.

4. On the next page, you are reminded of the system requirements for GigaFlow:

Click OK to continue.

5. After accepting the license agreement and acknowledging the reminder, you can define:

- The ports that GigaFlow will listen on for the product UI.

- How much RAM the product can use. This does not include PostgreSQL requirements.

- The location of the GigaFlow database. The path name must not include spaces.

Click Next to continue.

6. Select a location for the GigaFlow application files. Again, the path name must not include spaces.

Click Install to continue.

7. When the installation is complete, you can click Show Details to see what the installer did.

Click Next to continue.

8. The installation is now finished. With the Run GigaFlow tick box checked, the installer will open the GigaFlow User Interface in your default browser when you click Finish.

9. Your browser will open at the GigaFlow login page. The default credentials are:

- Username: admin

- Password: admin

Below the login panel, you can see your GigaFlow installation version details.

10. After logging in for the first time, the Quick Start Settings page appears. You are prompted here to configure the minimal essential settings for GigaFlow to start working properly:

- Server Name is your name for this server. It does not have to be the same as the server hostname. The Server Name will appear in the web browser title bar.

- Drive to Monitor is the local drive that you want to monitor, for example:

D:/Data. - Minimum Free Space is the minimum free space that you want to maintain on disk before purging old data. This is typically 10% of your total disk space.

- Listener Ports allows you to define a new port, by filling-in the port details and clicking the + icon.

- Existing Listener Ports provides the list of existing listener ports available. Here you can see if these ports are receiving flows or syslogs.

- SNMP V2 Settings and SNMP V3 Settings allow adding SNMP v2 and v3 communities.

Note that you can also see and configure all these settings separately from the System menu, or by clicking the links provided next to each setting name.

If you want to stop seeing the Quick Start Setting page each time you log in, enable the option Do Not Show Quick Start On Startup. This setting is also available from System > Global.

Click Save when finished.

You are ready to use GigaFlow.

See the GigaFlow Wiki for further installation notes.

Log In and Provision GigaFlow

Logging In

Figure: GigaFlow's login screen

Log in to GigaFlow from any browser using your credentials. GigaFlow will open on the Dashboards welcome screen.

See Log in in the Reference Manual.

After logging in for the first time, the Quick Start Settings page appears. You are prompted to configure the minimal essential settings for GigaFlow to start working.

You can also configure the rest of the settings as explained below.

Give the Server a Name

Enter a name for your GigaFlow server. This does not have to be the hostname. This will be used in the title bar and when making calls back to the Viavi home server.

See System > Global in the Reference Manual for more. You can change the server name at any time by navigating to System > Global > General.

Assign the Disk to Monitor

Specify the local drive that you want to monitor, for example: D:/Data.

See also System > Global in the Reference Manual for more. You can change the storage settings at any time by navigating to System > Global > Storage.

Assign Disk Space

Enter how much free space to keep on disk before forensic data is aged out. This is the minimum free space to maintain on your disk before forensic data is overwritten. The default value is 20 GB.

See also System > Global in the Reference Manual for more. You can change the storage settings at any time by navigating to System > Global > Storage.

Define Listener Ports

Add new listener ports, by filling-in the port details and clicking the + icon.

See also System > Receivers in the Reference Manual for more. You can change the storage settings at any time by navigating to System > Receivers.

Enable Call Home Functionality

Enable GigaFlow's call home functionality by configuring the proxy server, if one exists, at System > Global> Proxy. This information is required for several reasons: (i) to allow GigaFlow to call home and register itself; (ii) to let us know that your installation is healthy and working; (iii) to update blacklists. All calls home are in cleartext. See System > Licences for more. The required information is:

- Server ID. This is the unique server ID; it is sent in calls home.

- Proxy address. Leave blank to disable proxy use.

- Proxy port, e.g. 80.

- Proxy user.

- Proxy password.

- Click ? to test the proxy server.

- Click Save

.

.

See also System > Global in the Reference Manual for more.

Install License

You must enter a valid license key to use GigaFlow. Enter your license key in the text box at the end of the table at System > Licenses. Click ADD to submit.

See also System > Licenses in the Reference Manual.

Enable Blacklists

To begin monitoring events on your network, subscribe to one or more blacklists at System > Watchlists.

See also System > Watchlists in the Reference Manual.

Enable SNMP

To poll your network infrastructure devices, you must provide GigaFlow with SNMP community strings and authentication details. You can do this at System > Global.

SNMP V2 Settings

Enter the community name in the text box and click Save.

SNMP V3 Settings

- Enter a user name.

- Select the Authentication Type. Choose one of the following secure hash functions:

- MD5

- SHA

- HMAC128SHA224

- HMAC192SHA256

- HMAC256SHA384

- HMAC384SHA512.

- Enter an authentication type password.

- Select the Privacy Type. Choose one of the following encryption standards:

- AES-128

- AES-192

- AES-256

- AES-192-3DES

- AES-256-3DES

- DES

- 3DES.

- Enter a privacy password.

- Enter a context. SNMP contexts provide VPN users with a secure way of accessing MIB data. See Cisco and the Glossary for more.

- Click the Save button.

See also System > Global in the Reference Manual.

Enable and Test First Packet Response

First Packet Response (FPR) is a useful diagnostic tool, allowing you to compare the difference between the first packet time-stamp of a request flow and the first packet time-stamp of the corresponding response flow from a server. By comparing the FPR of a transaction with historical data, you can troubleshoot unusual application performance.

To begin using First Packet Response, you must specify the server subnets that you would like to monitor. Navigate to Configuration > Server Subnets.

Select the Server Subnets tab and enter a server subnet of interest. To add a new server subnet:

- Enter the subnet address.

- Enter the subnet mask.

- Click Add Server Subnet.

- Click Cancel to clear the data entered.

By clicking on the Servers tab, you can view a list of the identified servers that will be monitored. You can see a realtime display of the First Packet Response by clicking to enable the ticker beside each server.

By clicking on the Devices tab, you can view a list of infrastructure devices - routers - associated with the server subnets of interest.

Reports for all active monitored servers can be viewed at Reports > First Packet Response.

See also Use First Packet Response to Understand Application Behaviour in the How-to section and Configuration > Server Subnets and Reports > First Packet Response in the Reference Manual for more.

Cleanup for a fresh installation of GigaFlow

If you need to properly uninstall GigaFlow in order to prepare your machine for a fresh installation, then refer to the following procedures depending on your operating system.

Cleanup a Windows machine

Cleanup for a machine with a GigaFlow version before 18.22.X.X

To cleanup your machine, perform the following steps:

- In the GigaFlow installation folder, access the Flow folder.

- Run the uninstall.exe file, and follow the uninstall wizard instructions.

As an alternative, you can also uninstall the NSIS GigaFlow application fromControl Panel\All Control Panel Items\Programs and Features . - There are 2 registry entries which need to be deleted. In the administrator command prompt shell, run the following commands:

-

reg delete "HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\NSIS_AnuViewFlow" /f -

reg delete "HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\AnuViewSoftware" /f

-

Note: To only uninstall PostgreSQL run the uninstall-postgresql.exe file in the

Cleanup for a machine with GigaFlow version 18.22.X.X and newer

To cleanup your machine, perform the following steps:

- In the GigaFlow installation folder, access the Flow folder.

- Run the uninstall.exe file, and follow the uninstall wizard instructions.

As an alternative, you can also uninstall the NSIS GigaFlow or Observer GigaFlow (if your GigaFlow version is 18.24.0.0 or newer) application fromControl Panel\All Control Panel Items\Programs and Features . - There are 2 registry entries which need to be deleted. In the administrator command prompt shell, run the following commands:

-

reg delete "HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\NSIS_AnuViewFlow" /f -

reg delete "HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\AnuViewSoftware" /f

-

Cleanup a Linux machine

Cleanup for a machine with a GigaFlow version before 18.22.X.X

To cleanup your machine, perform the following steps:

- Open a terminal prompt with suitable permissions.

- To uninstall PostgreSQL, perform the following steps:

- To list the PostgreSQL packages, run the following command:

rpm -qa | grep postgres - For each of the above packages, run the following command:

rpm -e <postgres_package_name>

- To list the PostgreSQL packages, run the following command:

- To uninstall GigaFlow, run the following command:

rm -fr /opt/ros

Cleanup for a machine with GigaFlow version 18.22.X.X and newer

To cleanup your machine, perform the following steps:

- Open a terminal prompt with suitable permissions.

- To uninstall GigaFlow, perform the following steps:

- To list the GigaFlow packages, run the following command:

rpm -qa | gigaflow - For each of the above packages, run the following command:

rpm -e <gigaflow_package_name>

- To list the GigaFlow packages, run the following command:

PostgreSQL 16.1 upgrade (for GigaFlow version 18.19.0.0 thru 18.21.X.X)

Upgrading to PostgreSQL 16.1 on Windows

The following procedure helps you upgrade to a GigaFlow that contains the PostgreSQL 16.1 version.

This procedure assumes the following default locations for PostgreSQL 16.1:

- PostgreSQL 16.1 install folder:

<GF_Install_Dir>\Flow\postgesql16 - PostgreSQL 16.1 data folder:

<GF_Install_Dir>\Flow\postgesql16\data .

If neccesary, then please modify these locations accordingly.

Note: For data migration from PostgreSQL 11 (or a version earlier than PostgreSQL 16.1) to PostgreSQL 16.1, the data folders must reside on the same drive.

Backup the PostgreSQL data

The upgrade process to PostgreSQL 16.1 requires to migrate the data in the old cluster version to the new major version. This happens outside the GigaFlow installation and to prevent data loss it is highly recommended to backup the original data. The following command will dump the existing PostgreSQL data to an SQL file that is used to restore the data in case of upgrade failures. In the administrator command prompt shell, run the following command:

<GF_Install_Dir>\Flow\postgresql\bin\pg_dump -E UTF8 --host=127.0.0.1 -U myipfix -f <GF_Install_Dir>\GigaFlowBackup.sql

Note: To restore data that was previously saved using the

Install GigaFlow with PostgreSQL 16.1

- Follow the Windows installation procedure to install GigaFlow: Install GigaFlow on a Windows Platform.

Note: This will not install the latest PostgreSQL 16.1 version.

Install PostgreSQL version 16.1

- Use the PostgreSQL Windows installer to install PostgreSQL version 16.1.:

<GF_Install_Dir>\Flow\dist\postgresql-16.1-1-windows-x64.exe - Change the install folder to

<GF_Install_Dir>\Flow\postgresql16 . - Change the data folder to

<GF_Install_Dir>\Flow\postgresql16\data .Note: The data folder for PostgreSQL 16.1 must reside in the same drive as that of the previous PostgreSQL version.

- Enter the PostgreSQL password: P0stgr3s_2ME.

- Accept the port 5433 for the new PostgreSQL version 16.1.

- Unmark the Stackbuilder option from the component list.

Note: The old cluster does not need to be deleted.

Caution: If PostgreSQL 11 is used for GigaFlow and other applications, then do not upgrade to PostgreSQL 16.1. This will upgrade the old cluster to the newer version and make it unusable with PostgreSQL 11 version.

Caution: If GigaFlow is set to connect to a remote PostgreSQL installation, then do not upgrade to PostgreSQL 16.1.

Note: You can ignore the following message: The system cannot find the file specified.

Note: Let the migration script to run until completion. The completion of this process requires a time period from 10 minutes to several hours, depending on the size of the data that is migrated. When the data migration is successful the message Data migration complete is displayed.

Data migration to the new PostgreSQL version

- Run the postgres_migration.bat script from the

<GF_Install_Dir>\Flow\resources\docs\sql folder. - On the successful completion of the script, connect to the server and make sure that the data is correct.

Upgrading to PostgreSQL 16.1 on Linux

Server with direct internet access

If the server has internet access, then you can run the following command, which will download the latest build and install it (causing GigaFlow service to restart):

Upgrading to PostgreSQL 16.1

- The upgrade process to PostgreSQL 16.1 requires to migrate the data in the old cluster version to the new major version. This happens outside the GigaFlow installation and to prevent data loss it is highly recommended to backup the original data. The following command will dump

the existing PostgreSQL data to an SQL file that is used to restore the data in case of upgrade failures. In the terminal prompt, run the following command:

pg_dump -E UTF8 --host=127.0.0.1 -U myipfix -f GigaFlowBackup.sql - For GigaFlow 18.19.0.0. and newer, run the following script to upgrade to PostgreSQL 16.1:

/opt/ros/resources/unix/ObserverGigaFlow_Upgrade_PG16.sh 11 Note: The parameter 11 represents the PostgreSQL version that it upgrades from.

Note: The old cluster does not need to be deleted.

Caution: If PostgreSQL 11 is used for GigaFlow and other applications, then do not run the ObserverGigaFlow_Upgrade_PG16.sh script. This will upgrade the old cluster to the newer version and make it unusable with PostgreSQL 11 version.

Caution: If GigaFlow is set to connect to a remote PostgreSQL installation, then do not run the ObserverGigaFlow_Upgrade_PG16.sh script.

Server without direct internet access

- If the server has no internet access, then copy the build file ObserverGigaFlowUnixx64_rpm.tgz into the server

folder /opt and then run the command:

tar -vxzf /opt/ObserverGigaFlowUnixx64_rpm.tgz -C / - Restart the GigaFlow service.

Upgrading to PostgreSQL 16.1

- The upgrade process to PostgreSQL 16.1 requires to migrate the data in the old cluster version to the new major version. This happens outside the GigaFlow installation and to prevent data loss it is highly recommended to backup the original data. The following command will dump

the existing PostgreSQL data to an SQL file that is used to restore the data in case of upgrade failures. In the terminal prompt, run the following command:

pg_dump -E UTF8 --host=127.0.0.1 -U myipfix -f GigaFlowBackup.sql - For GigaFlow 18.19.0.0. and newer, run the following script to upgrade to PostgreSQL 16.1:

/opt/ros/resources/unix/ObserverGigaFlow_Upgrade_PG16.sh 11 Note: The parameter 11 represents the PostgreSQL version that it upgrades from.

Note: The old cluster does not need to be deleted.

Caution: If PostgreSQL 11 is used for GigaFlow and other applications, then do not run the ObserverGigaFlow_Upgrade_PG16.sh script. This will upgrade the old cluster to the newer version and make it unusable with PostgreSQL 11 version.

Caution: If GigaFlow is set to connect to a remote PostgreSQL installation, then do not run the ObserverGigaFlow_Upgrade_PG16.sh script.

Managed PostgreSQL 16.1 upgrade (for GigaFlow version 18.22.2.0 and newer)

Starting with GigaFlow version 18.22.2.0 and newer, the GigaFlow installer will no longer include a separate PostgreSQL installer. Instead, the PostgreSQL binaries are now bundled directly within the GigaFlow installer. Specifically, PostgreSQL version 16.1 is included with GigaFlow version 18.22.2.0. The PostgreSQL service operates on the dedicated port 26906 and is registered under the service name GFPostgresSvc. This change simplifies the installation process because it eliminates the need for a separate PostgreSQL installation.

Upgrading from a release prior to GigaFlow version 18.19.0.0

If you are upgrading GigaFlow from a release prior to version 18.19.0.0 that is running a PostgreSQL version less than 16, then the system will be upgraded to PostgreSQL version 16.1. Additionally, your old data will automatically be migrated to this new version.

For details, refer to the Data Migration section.

Upgrading from GigaFlow version 18.19.0.0 release and newer

There are 3 possible upgrade scenarios:

- PostgreSQL version 16.1 was installed with GigaFlow version 18.19.0.0 or newer, and old data was migrated to PostgreSQL version 16.1.

- PostgreSQL version 16.1 was installed with GigaFlow version 18.19.0.0 or newer, but the old data was not migrated to PostgreSQL version 16.1. In this case, PostgreSQL 16.1 was initiated with a new cluster.

- PostgreSQL version 16.1 was not installed. In this case, GigaFlow continues to run with the old PostgreSQL version due to its backward compatibility.

Note: For this scenario the behavior is the same as upgrading from a GigaFlow version prior to 18.19.0.0. For details, refer to the Data Migration section.

Note: The newly managed PostgreSQL database will not support external applications that use the database. If this is your situation, then proceeding with the installation will delete the databases created by the external applications.

In scenarios 1 and 2, when you install GigaFlow version 18.22.2.0, GigaFlow will be set up with a managed PostgreSQL version 16.1. The existing PostgreSQL service will be deregistered, and a new PostgreSQL service

named

Note: The new PostgreSQL service will utilize the same data folder as the previous one. This entire process will be transparent to the user, ensuring a seamless transition.

Data Migration

Locally stored PostgreSQL 11 databases will be migrated to PostgreSQL 16.1 automatically. Depending on the size of your database the migration process can take a long time to complete. The newly managed PostgreSQL database will not support external applications using the database. If this is your situation, then migrate your data as needed.

To estimate the size of the database, perform the following steps:

- Login to GigaFlow.

- In the GigaFlow UI, go to the System > Global > Storage tab and note the path from the Data Drive To Monitor field.

- For Windows installations:

- Browse the file system and right-click on the folder from the Data Drive To Monitor path.

- Select the Properties option and note the Size on disk information.

- For Linux installations:

- Login to the system using SSH secure protocol.

- Run the following command:

du -sh /path/to/directory Note: The part

/path/to/directory represents the path found in the Data Drive To Monitor field. - Note the size of the directory.

- For Windows installations:

The duration of the data migration process can be shortened by decreasing the retention period of the forensics data (see Reducing the size of the GigaFlow database prior to installation section).

Note: You must complete this process before installing GigaFlow.

The following table provides guidance for data migration times on Windows. Your times may vary depending on numerous factors.

| Data Size | Data Migration Time |

|---|---|

| ~6 TB | 10 min |

| ~12 TB | ~2 h |

The following table provides guidance for data migration times on Linux. Your times may vary depending on numerous factors.

| Data Size | Data Migration Time |

|---|---|

| ~5 TB | 3 min |

Reducing the size of the GigaFlow database prior to installation

To reduce the size of the GigaFlow database, perform the following steps:

- Login to GigaFlow.

- In the GigaFlow UI, go to the System > Global > Storage tab.

- In the Max Forensics Storage (Days) field, decrease the number of days for your data to be stored.

Note: Make sure that the number of days in the Max Forensics Storage (Days) field is larger than the one in the Min Forensics Storage (Days) field. - Click the Save Storage Settings button.

To validate that the storage size is smaller and within acceptable limits, repeat the steps in the Estimating the database size section.

Note: You can restore the number of storage days to their previous configuration after the installation of GigaFlow 18.22.2.0 or newer is complete.

Windows upgrade

First go to https://update.viavisolutions.com/latest/, download the file ObserverGigaFlowSetupx64.exe and run it.

During the installation of GigaFlow 18.22.2.0 or newer, you will be prompted with different dialogs depending on what version you are upgrading from and different possible scenarios.

If multiple PostgreSQL versions are detected, then GigaFlow will also prompt you with the following screen:

With respect to the scenarios presented in section Upgrading from GigaFlow version 18.19.0.0 release and newer, select the options as follows:

- PostgreSQL 16 option for scenarios 1 and 2

- PostgreSQL 11 option for scenario 3.

Note: For scenario 3, the setup will install GigaFlow and the managed PostgreSQL version 16.1 followed by an automatic data migration.

Windows data migration

When PostgreSQL 11 option is selected for scenario 3, or you are upgrading from a version prior to GigaFlow 18.19.0.0, the following dialog regarding Data Migration is shown:

If you are concerned about the duration of the data migration process, do not click the Next button. Instead, click the Cancel button to exit the setup and follow the steps in the Estimating the database size section. If this is not your case, then click the Next button to continue the installaion process.

Data migration is monitored every 30 seconds and is displayed in a separate command window. A new logged entry is displayed every 30 seconds until the data migration process is completed. This status window will automatically close at that time.

Logging

Data migration is logged in the file

How to change the PostgreSQL data folder after installation

To change the PostgreSQL data folder on Windows, perform the following steps:

- In Registry Editor, modify the following registry key entry:

HKLM\SYSTEM\CurrentControlSet\Services\postgresql-x64-11\ImagePath

Change the -D option in the value of the key to the new folder, for example:"c:\GigaFlow\Flow\postgresql\bin\pg_ctl.exe" runservice -N "postgresql-x64-11" -D "D:\New_Data_Folder" -w - For GigaFlow version 18.23.0.0 and newer, also modify the following registry key entries to the New_Data_Folder:

HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\AnuViewSoftware\Flow\postgresqlData HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\AnuViewSoftware\Flow\sqlData

Linux upgrade

This procedure is valid for both Oracle Linux 8 and RedHat Enterprise Linux 8.

First go to https://update.viavisolutions.com/latest/ and download the tar file ObserverGigaFlowUnixx64_rpm.tgz.

To untar the file, run the following command:

The tar file contains the following files:

- gigaflow_deps.rpm which contains tools for debugging.

- gigaflow.rpm which contains the GigaFlow binary.

- ObserverGigaFlow_Install.sh that is a wrapper script which helps with the installations.

Clean Install

To install GigaFlow on a new Linux box, run the script using the following command:

This will install the dependencies and the GigaFlow software, and will start the new PostgreSQL version 16.1 service GFPostgresSvc on port 26906.

At the end of the installation output to terminal ignore the following error message:

ERROR: extension "plpgsql" already exists

The default data folder for the managed PostgreSQL on Linux is /opt/ros/pgsql/data. If the default data location needs to be changed, run the following command:

<new_data_folder_path>

The GFPostgresSvc service will start using the new data folder. The default data location will be linked to the new folder, so no other changes are needed.

How to change the PostgreSQL data folder after installation

The default data folder for the managed PostgreSQL on Linux is /opt/ros/pgsql/data. If the default data location needs to be changed, run the following command:

<new_data_folder_path>

The GFPostgresSvc service will start using the new data folder. The default data location will be linked to the new folder, so no other changes are needed.

Upgrade

Before upgrading GigaFlow, make sure that the postgresql service is running.

For upgrades from GigaFlow versions 18.21.0.0 and prior, run the following command:

This will upgrade GigaFlow to the latest version 18.22.2.0. A managed PostgreSQL service GFPostgresSvc is started that will run on port 26906.

If the previous version of PostgreSQL is less than 16 the old data is migrated to the new version. Refer to the Data Migration section for data migration times and steps to reduce the time. The file /tmp/gigaflow/dm.log displays the progress message Data Migration in Progress during the data migration process.

Note: If the previous version of PostgreSQL is already on version 16, then the new GFPostgresSvc service will use the same data folder and with the default data folder /opt/ros/pgsql/data linked to it.

Logging

For the logging information of the installation process, refer to the file /tmp/gigaflow/gigaflow.log.

Remote PostgreSQL

If GigaFlow is installed and connected to a PostgreSQL running remotely, then no data migration is performed by the installation process. You need to do the data migration manually.

The installation process will update GigaFlow and start the managed PostgreSQL locally on port 26906.

Note: GigaFlow version 18.22.2.0 will be the last release that supports remote PostgreSQL.

The same message as above is displayed on the Linux terminal when the ObserverGigaFlow_Install.sh script is run with the upgrade parameter.

Multiple Applications using the GigaFlow PostgreSQL installation

If applications other than GigaFlow have databases in the same PostgreSQL service that is used by GigaFlow, and a data migration is performed, then only the GigaFlow database will be migrated causing the other databases to be deleted. If this is detected, then the following warning shows.

The same message as above is displayed on the Linux terminal when the ObserverGigaFlow_Install.sh script is run with the upgrade parameter. You will have the choice to continue the installation process or to exit.

Troubleshooting a failed PostgreSQL migration

Windows troubleshooting

The Windows installer for GigaFlow 18.22.2.0 which contains the GigaFlow managed PostgreSQL binaries version 16.1 will perform the data migration if the version on the system that is upgraded is prior than 16.1. This is different from the process related to version 18.21.0.0 and prior, where you needed to manually install PostgreSQL 16.1 from a PostgreSQL Windows installer in the dist folder.

PostgreSQL service status

Make sure that the PostgreSQL service is running before you start the installation, since the scripts use the running service to query the data folder and the version.

To do this in Windows, open the services panel and check the state of the service. If the PostgreSQL service is not running or cannot start, then you need to fix this before you begin the installation process.

You can use the Windows Event Log to determine the root cause. The log folder in the PostgreSQL data folder has log files for each day which might contain useful information.

Selecting the correct PostgreSQL version during installation

If the installer detects multiple versions of PostgreSQL in the HKLM\System\CurrentControlSet\Services registry entry, then it will prompt the user to select the one used for GigaFlow.

If the wrong version is selected, then the installer fails with an error message since it will try to initialize PostgreSQL data but the folder is not empty. In this case abort the installation and retry.

Data migration on Windows

The GigaFlow installer will perform the data migration as part of the installation process. If the data migration fails, then refer to the <GigaFlow_InstallDir>\pgdm.log file for the last successful step.

Check if any of the following cases apply to your failure scenario:

- Access to the necessary folders could not be granted for pg_upgrade.

- Open the <GigaFlow_InstallDir>\permission.log file and search for the keyword Failed. Run the following command in Command Prompt:

findstr "Failed processing" <GigaFlow_InstallDir>\permission.log - All the Failed entries should report 0. If this is not the case, then perform the following step:

- Right-click the related folder, select the Properties option and grant permissions manually. The security tab will show if the current user has Full Access to the folder.

- Open the <GigaFlow_InstallDir>\permission.log file and search for the keyword Failed. Run the following command in Command Prompt:

- The pg_upgrade step has the check flag failed.

- For all the errors that occurr during the pg_upgrade refer to the log files found in the pg_upgrade_output.d folder in the data folder of the new cluster. Check the correctness of the folder paths in the file <GigaFlow_InstallDir>\pg_upgrade_params.txt

- The pg_upgrade_internal.log file will contain the reason for the failure.

The most common error is that it cannot create a hard link. This can occur if the data folder for the old and the new clusters are not in the same drive. - The PostgreSQL bin and data directories are read from the following registry entries. If these entries are incorrect, then edit and correct them, and retry the data migration.

HKLM SYSTEM\CurrentControlSet\Services\postgresql-x64-11 "ImagePath"HKLM SYSTEM\CurrentControlSet\Services\postgresql-x64-16 "ImagePath"

Once the issue is resolved you must run the data migration from the Command Prompt in Administrator mode as follows:

<GigaFlow_InstallDir>\resources\docs\sql\startDataMigration.bat <GigaFlow_InstallDir>\pg_upgrade_params.txt After the data migration process is successfully completed, run the following commands:

net start GFPostgresSvc <GigaFlow_InstallDir>\resources\docs\sql\postgresinstall.bat <GigaFlow_InstallDir> sc config GigaFlow depend= "" net start GigaFlow <GigaFlow_InstallDir>\resources\docs\sql\addDependency.bat

- If the pg_upgrade completed successfully but the message Data Migration Complete is not printed on the screen (not very common), then perform the following steps:

- In the new PostgreSQL cluster data folder, open the postgresql.conf file and search for port. If the port is not 26906, then change it to this value.

- Start the GFPostgresSvc service from the services panel.

- Change the postgresql-x64-11 service to manual from the services panel.

- Start the GigaFlow service.

- If the GigaFlow log file reports incorrect password for the user myipfix (not very common), then perform the following steps:

- Stop the GigaFlow service.

- Stop the GFPostgresSvc service.

- In the new PostgreSQL cluster data folder, open the pg_hba.conf file and at the bottom change all scram-sha-256 entries to trust.

- Restart the GFPostgresSvc service.

- Open the Command Prompt window as administrator and run the following command:

C:\GigaFlow\Flow\resources\docs\sql\postgresinstall.bat - Stop the GFPostgresSvc service

- In the data folder, pg_hba.conf file, replace trust with scram-sha-256.

- Restart the GFPostgresSvc service.

- Restart the GigaFlow service.

Linux troubleshooting

If the installation or the data migration process fails, then check the logs in the /tmp/gigaflow folder. The gigaflow.log file contains the installation logs.

If the data migration fails, then refer to the log files from the pg_upgrade_output.d folder present in the /opt/ros/pgsql/data folder.

The /tmp/gigaflow/ folder will have a file named pg_upgrade_check.sh which has the bin and data folder paths of the old and new PostgreSQL. Make sure that the paths are correct.

After the issue is fixed, run the following commands to restrat the data migration process:

su - postgres < /tmp/gigaflow/pg_upgrade_check.sh su - postgres < /tmp/gigaflow/pg_upgrade.sh systemctl start GFPostgresSvc su - postgres < /opt/ros/resources/unix/altermyipfixdb.sh

Conducting Searches in GigaFlow

Search for Security Events Associated with an IP Address

To find out more about a particular IP address:

- Enter an IP address in the main GigaFlow Search box at the top of the screen.

- Click Go.

- Select a reporting period and click Submit .

Click on Events tab in the panel on the left side of the screen.

The infographics and tables display all the security events associated with that IP address during the reporting period.

See Search and Events in the Reference Manual for more.

Conduct a Search and Apply a Macro or Script

See Reports > System Wide Reports > Device Connections and Reports > System Wide Reports > MAC Address Vendors in the Reference Manual to find out get a list of MAC addresses associated with your network.

Search for a MAC Address

See Search in the Reference Manual for more.

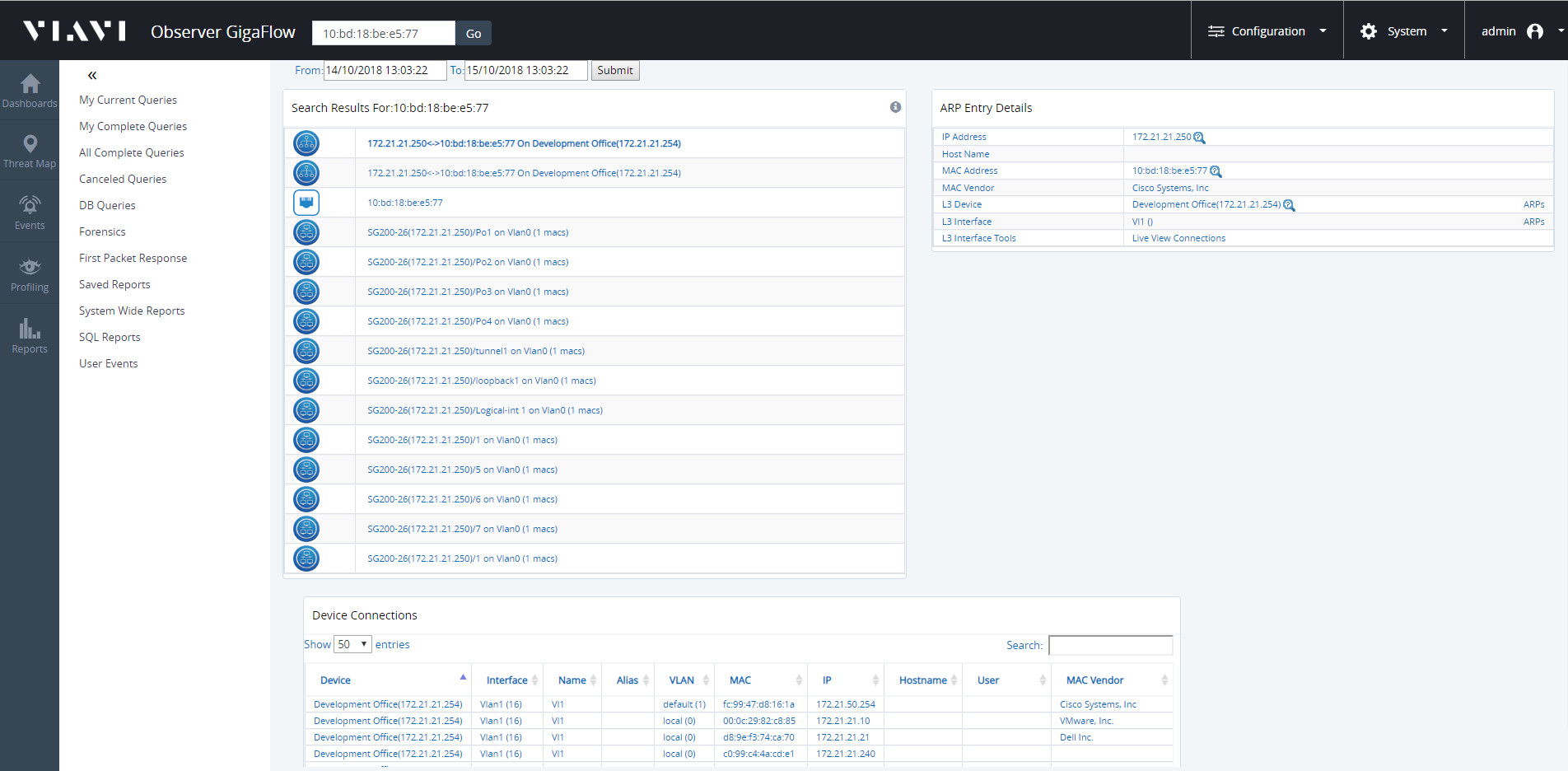

Search for a MAC address to see what device it is connected to and the VLAN it is in:

- Enter the entire MAC address into the search box.

- You must enter the full address.

- GigaFlow's MAC address search works for any standard MAC address format.

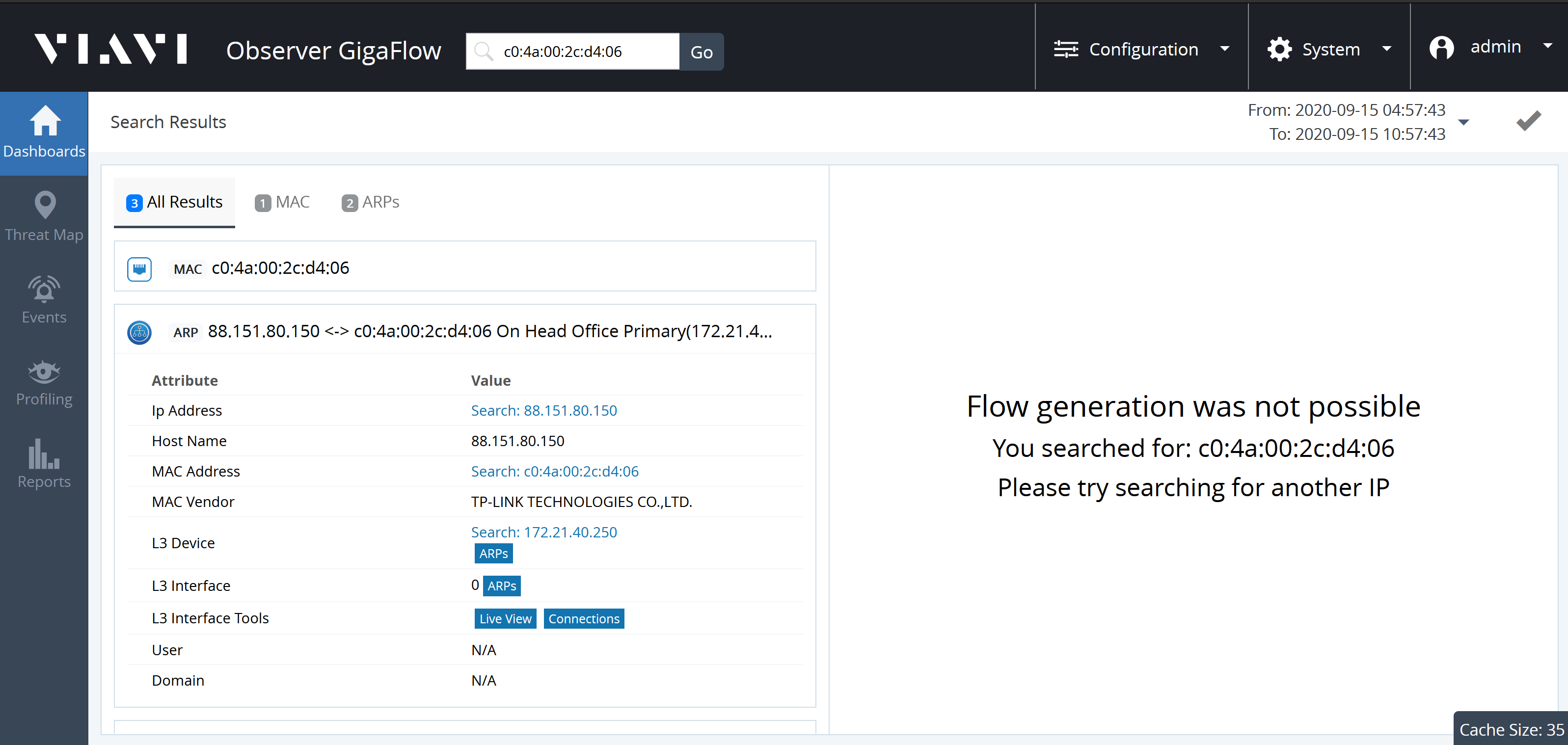

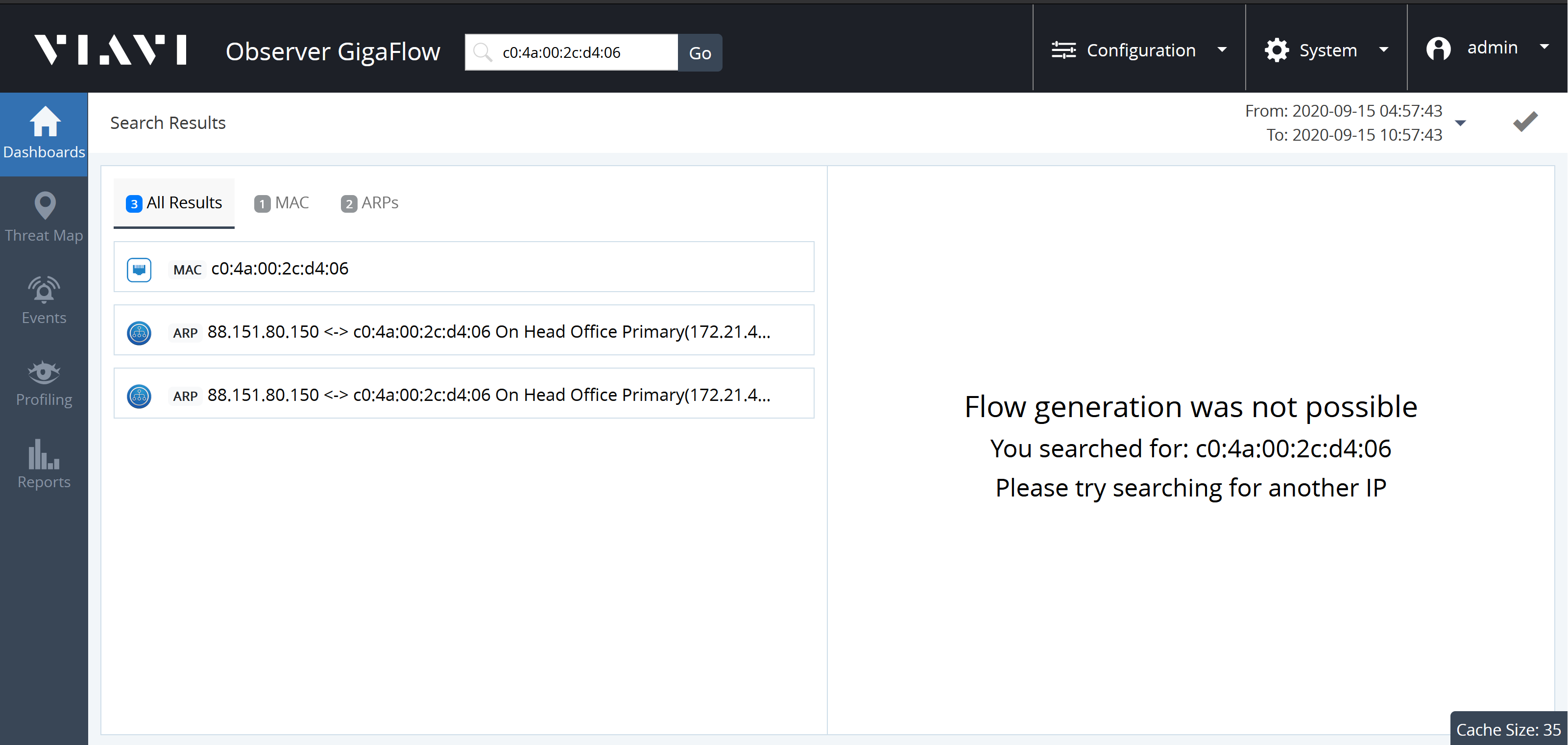

For example, searching for the MAC address c0:4a:00:2c:d4:06 on our demo network:

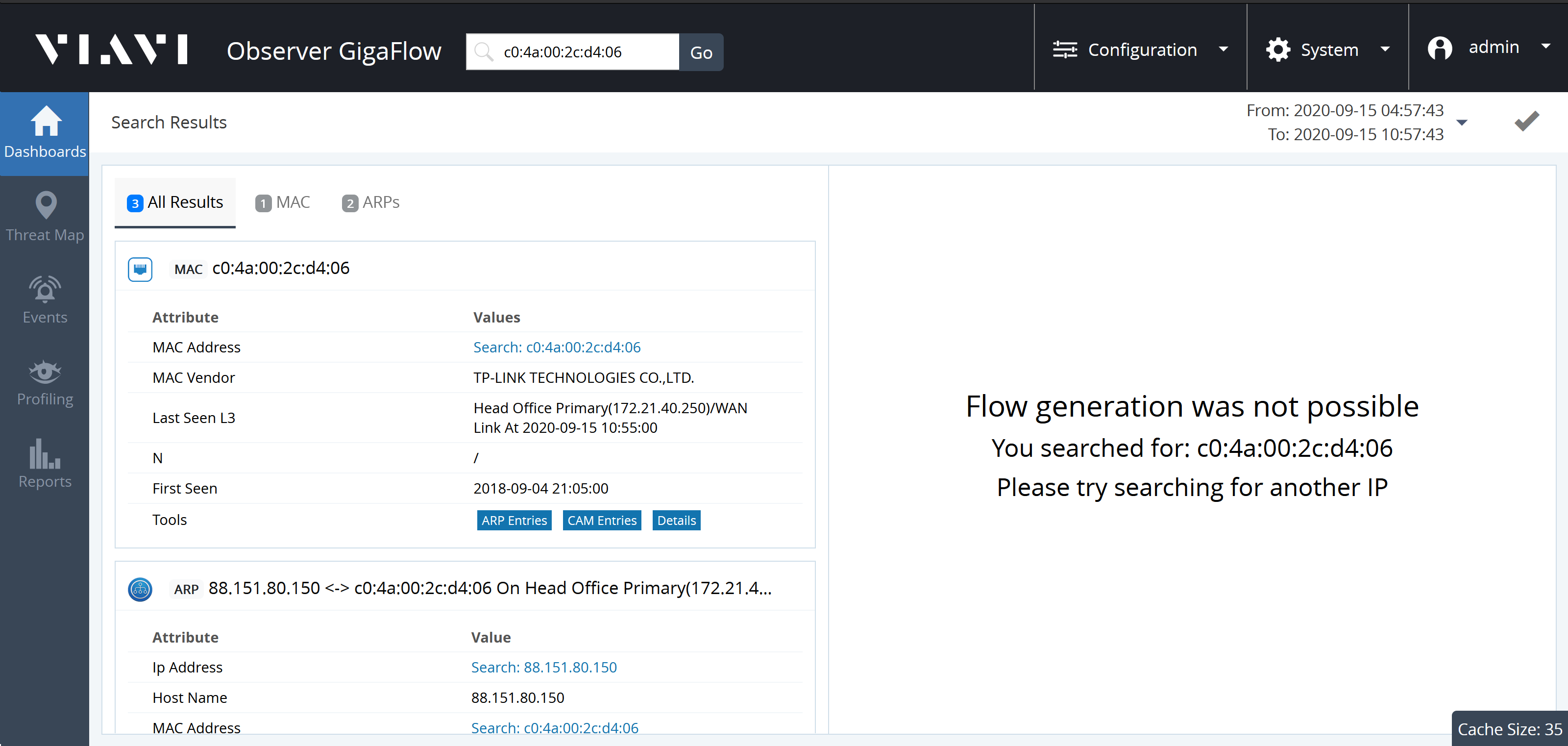

Expanding the MAC panel displays information:

- MAC Address.

- MAC Vendor.

- Last Seen L3: Last seen on which Layer-3 device.

- N: .

- First Seen: date and time first seen on network.

- Tools: ARP Entries, CAM Entries, Details.

Selecting ARPs from the links in Tools brings up the relevant ARP entries. From here, you can click an interface to access more information about the physical interface the device is connected to. The interface with the lowest MAC count is the connected interface.

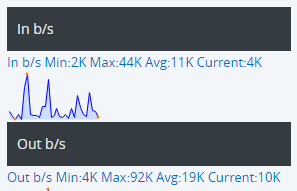

- Click Live Interface in the expanded ARPs panel to view the live in- and out- utilisation of the interface.

- Live View also provides speed, duplex and error count information for the interface.

- Click on Connections in the ARPs panel to see what other devices are connected to the same port.

Blue boxed text indicates any piece of information that allows you to conduct a follow-on search:

- Click on the IP address follow-on search to find out if that address has been seen by any flow devices recently. The IP address search also shows any recent associated secflow data or events, i.e. blacklisted IP addresses and so on.

- Click on the MAC address follow-on search link to find out where the device is physically connected.

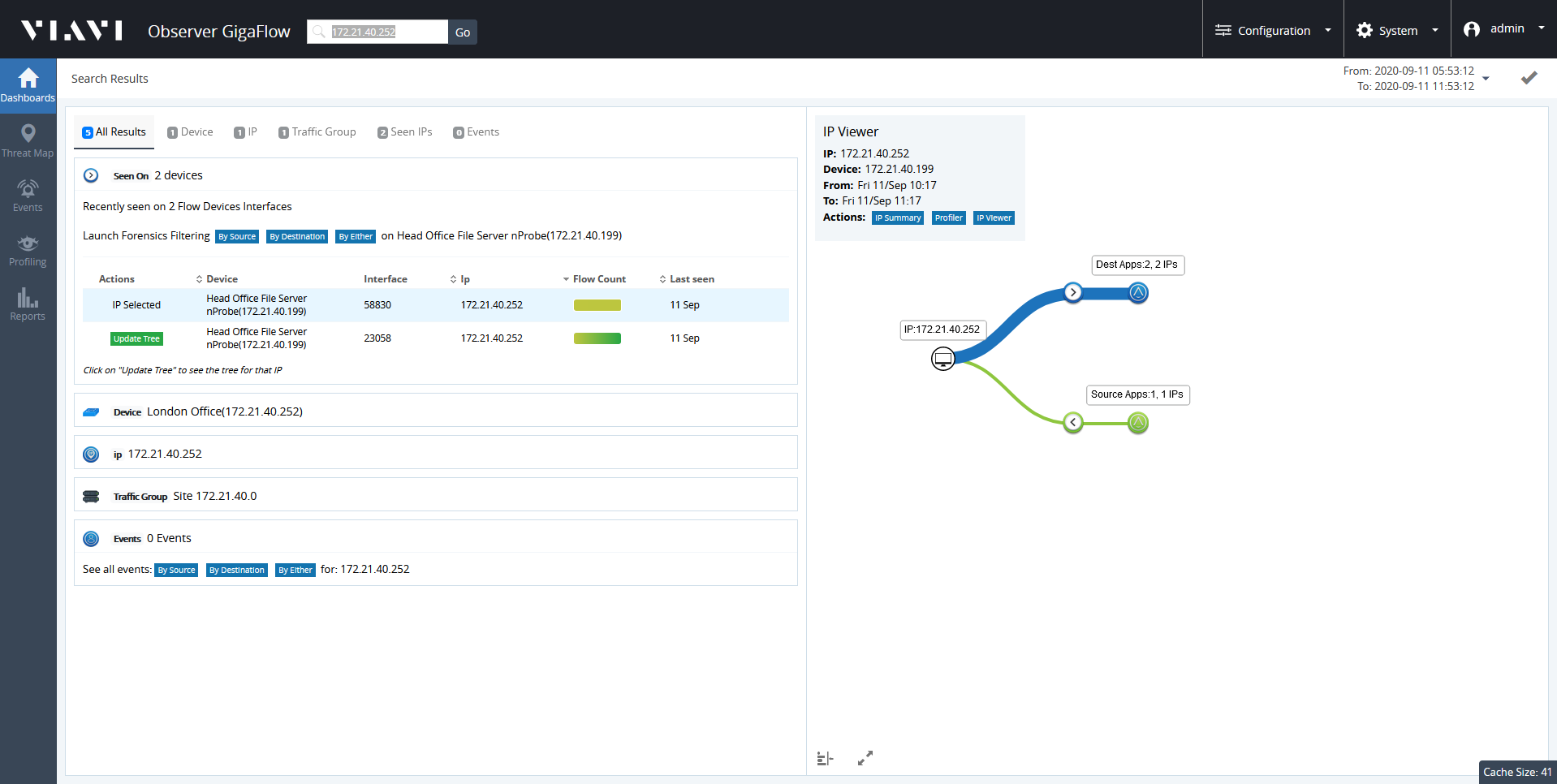

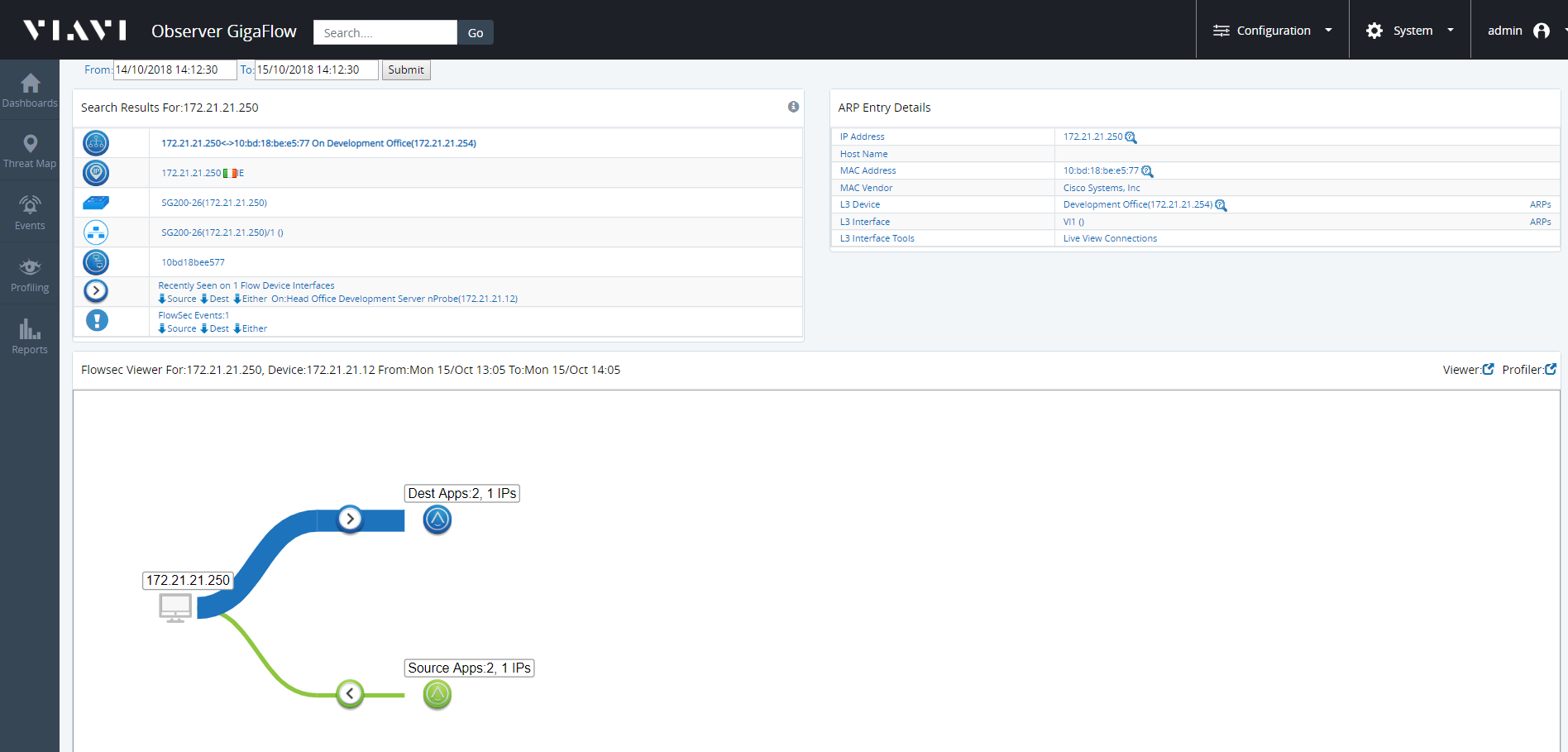

The Treeview, or Graphical Flow Map, will automatically load. This is a visual representation of the in- and out- traffic associated with a selected device.

To explore the Graphical Flow Map:

- Click on any icon to show more information.

- Click on the Destination Apps icon or the Source Apps icon for a breakdown of the applications. You can click on these to expand the tree.

- Move the pointer over icons to display the DNS Information of each device, showing how much traffic has been used.

- Click + to see more devices in a tree of many branches.

- The size of the line linking any two devices is proportional to the traffic volume between them.

- Click on any line in the Treeview table to filter only inbound or only outbound traffic. This will open a report showing traffic for the past two hours.

Search for a Username

- Enter a username, or part of a username, into the search box.

- GigaFlow will tell you if that username, or any variation of it, has been seen on your network.

- Click on any of the search results to display its associated information in the right-hand side panel.

- Click the associated IP address.

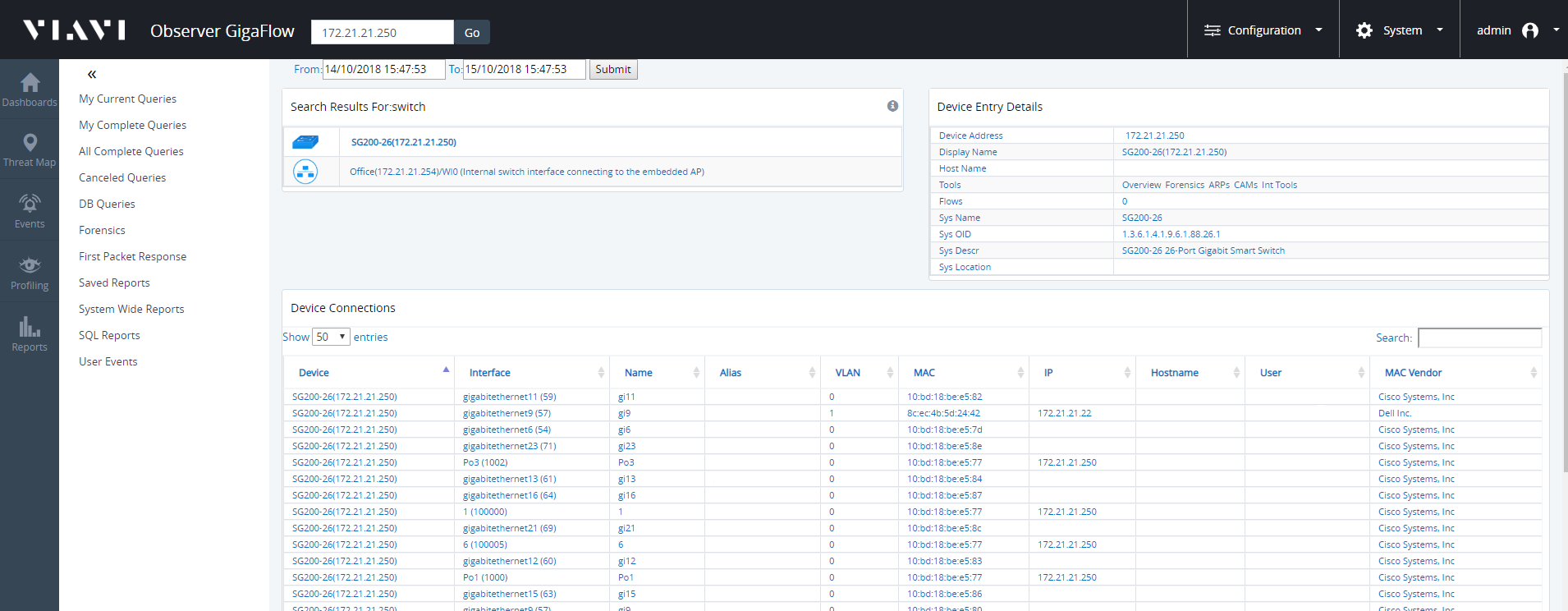

Search for a Network Switch

To search for a specific network switch:

- Enter the switch IP address into the search box.

- Click the icon beside the displayed switch; this will open the Device Connections table.

- The Device Connections table shows connections to any one port on any one VLAN.

- You can filter information by entering an interface, or device etc., into the search bar at the top-right of the table.

To make a follow-on search from a specific device or switch:

- Using switches from previously displayed tables, you can search for any switches of similar origin, e.g containing the same name elements.

- Click on the desired switch; this reopens the Device Connections table.

- You can also search by VLAN.

Apply a Macro (or Script) to a Switchport

Using GigaFlow Search, enter a known IP address associated with switch or the switch name and search:

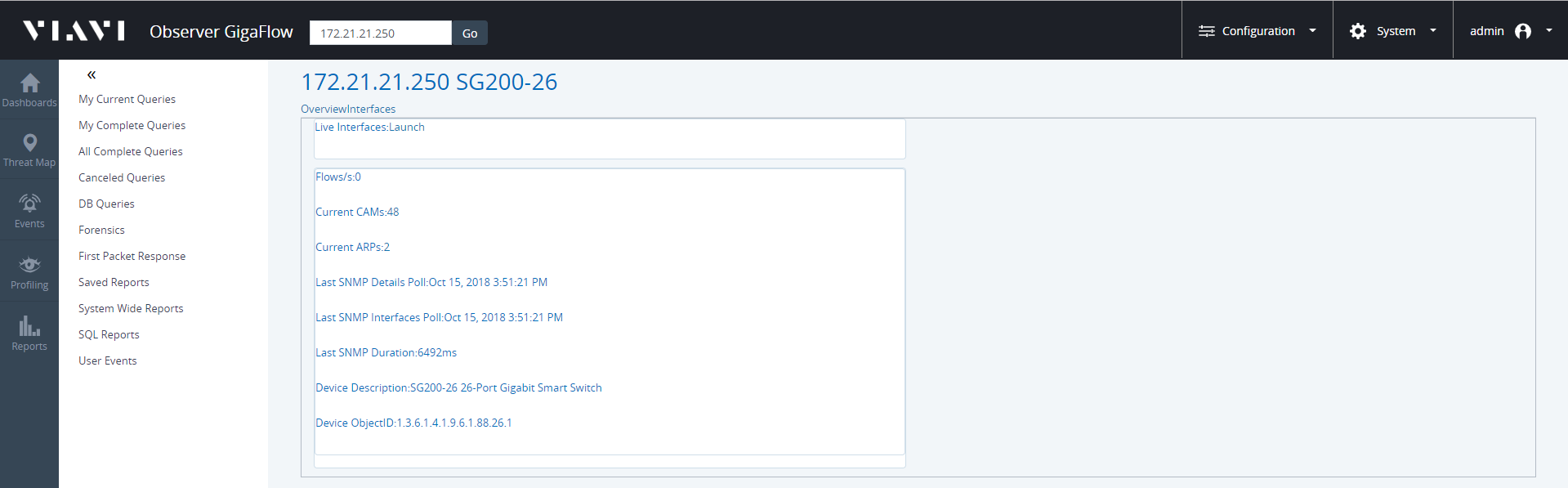

- Click on the the displayed switch name, in this case SG200-26(172.21.21.250).

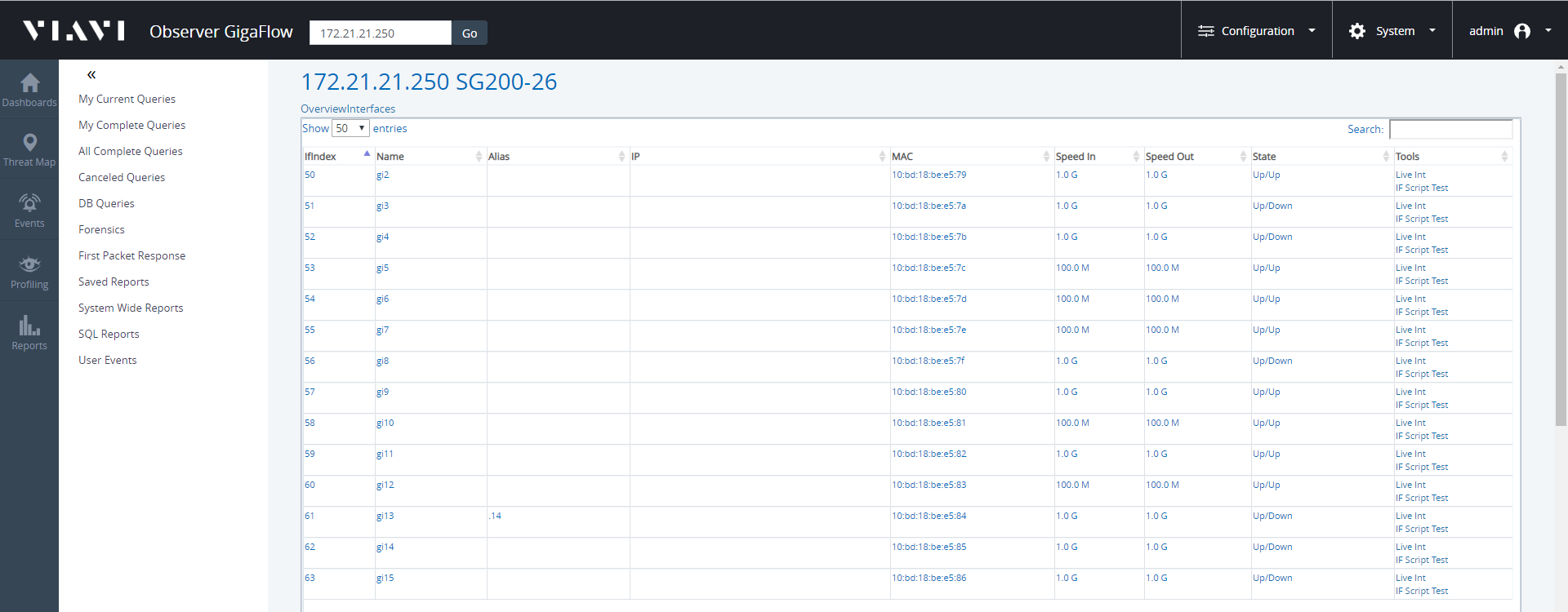

- Click on Int Tools in the Tools section of the right-hand side panel.

- Click on Interfaces next to Overview, at the top of the page.

- Find the interface that the script will run on.

- On that interface click IF Script Test in the last column on the right.

- Add the ticket number into the description field.

- Select the script.

- Click Submit.

- GigaFlow will check that the interface is not an uplink to another switch.

- The script will be applied to the switch.

Diagnostics and Reporting

Explore Bandwidth Usage by Interface and Application

Select a site, device or remote branch directly from the Dashboard > Summary Device and Dashboard > Interface Summary pages. Use GigaFlow Search when the site, device or remote branch is not listed.

After searching by IP address, click on the Device Name in the panel on the left. The information in the panel on the right-hand side of the screen will refresh. Click Overview in the Tools section of this panel.

Figure: Sample results from GigaFlow's search for an internal IP address

In the Summary Interfaces panel, click on any interface to get overview information.

If you are interested in inbound - or download - traffic, select the Drill Down icon  beside the interface name from the Summary Interfaces In panel.

beside the interface name from the Summary Interfaces In panel.

A list of the application traffic, ranked by volume, will be displayed here; this Application Flows report will include details of the internal IP address communicating with this application.

You can view historical flow data by selecting any date or time.

Identify Bandwidth Usage by User

Select a site, device or remote branch directly from the Dashboard > Summary Device and Dashboard > Interface Summary pages. Search using the main GigaFlow Search when the site, device or remote branch is not listed.

After searching by IP address, click on the Device Name in the panel on the left. The information in the panel on the right-hand side of the screen will refresh. Click Overview in the Tools section of this panel.

Figure: Sample results from GigaFlow's search for an internal IP address

In the Summary Interfaces panel, click on any interface to get an overview.

If you are interested in inbound - or download - traffic, select the Drill Down icon  beside the interface name from the Summary Interfaces In panel.

beside the interface name from the Summary Interfaces In panel.

A list of the application traffic, ranked by volume, will be displayed here; this Application Flows report will include details of the internal IP address communicating with this application.

You can view historical flow data by selecting any date or time.

From the Report drop-down menu at the top of the screen, select Application Flows with Users and click submit  .

.

To see all traffic for a particular user, click the Drill Down icon  to the left of that user. Click submit

to the left of that user. Click submit  Forensics check and submit icon. once more.

Forensics check and submit icon. once more.

Use First Packet Response to Understand Application Behaviour

First Packet Response (FPR) is a useful diagnostic tool, allowing you to compare the difference between the first packet time-stamp of a request flow and the first packet time-stamp of the corresponding response flow from a server. By comparing the FPR of a transaction with historical data, you can troubleshoot unusual application performance.

Application performance can be affected by many types of transaction: credit card transactions, DNS transactions, loyalty club reward transactions or any type of hosted transaction.

Ensure that First Packet Response (FPR) is enabled for each application. See Configuration > Server Subnets in the Reference Manual.

Select a site, a device or a remote branch directly from the Dashboard > Summary Device and Dashboard > Interface Summary pages. Access the Forensics report page by clicking the Drill Down icon  in the Summary Devices table.

in the Summary Devices table.

Search using the main GigaFlow Search when the site, device or remote branch is not listed. After searching by IP address, click on the Device Name in the panel on the left. The information in the panel on the right-hand side of the screen will refresh. Click Forensics in the Tools section of this panel.

Figure: Sample results from GigaFlow's search for an internal IP address

The panel on the right is displayed when the IP address is selected in the left-hand side panel.

Whether you arrive via the Dashboard or using GigaFlow Search, the Forensics report page will open with an Application Flows report.

Select Applications from the drop-down Report menu. Click the Submit icon  to refresh the Forensics report page.

to refresh the Forensics report page.

For demonstration purposes, we are looking for credit card related application data not displayed in the most used applications graph. To any application not listed in the top applications, change the view from graph to table.

Select 100 entries.

After identifying the application associated with credit card transactions, click the Forensics Drill Down icon  to the left of the application and choose Selected from the hover option list. Click Submit

to the left of the application and choose Selected from the hover option list. Click Submit  Forensics check and submit icon. again.

Forensics check and submit icon. again.

Select the All Fields Max FPR (First Packet Response) report type from the drop-down menu. Change the view from table to graph and click Submit  .

.

GigaFlow will return the response times for credit card applications in the reporting period. By clicking on the graph or selecting from the calendar, you can view response times over the period. The application response times, along the y-axis, are given in milliseconds.

Determine if Bad Traffic is Affecting Your Network

Click Events in the main menu. GigaFlow will display information about the network events during the reporting period, including:

- A timeline of all events in the reporting period, the Events Graph.

Figure: Events Graph

- Infographic of the number of event types by date and time; this is the Event Types infographic. Circle size indicates the number of events.

- Infographic of the frequency of an event triggered by a particular source host by date and time; this is the Event Source Host(s) infographic. Circle size indicates frequency.

- Infographic of the frequency with which each infrastructure device was affected by an event by date and time, i.e. number of times per time interval; this is the Infrastructure Devices infographic. Circle size indicates frequency.

- Infographic of the frequency of occurence of particular event categories by date and time, i.e. number of times per time interval; this is the Event Categories infographic. Circle size indicates frequency.

Figure: Event Categories infographic

- Infographic of the frequency of an event targetted at a particular host by date and time, i.e. number of times per time interval; this is the Event Target Host(s) infographic. Circle size indicates frequency.

- Infographic of the frequency of an event by the measure of confidence in its identification, i.e. your confidence in the completeness and accuracy of the blacklist that the IP address was found in, and the severity of the threat to your network infrastructure, i.e. number of times per time interval; this is the Confidence & Severity infographic. Circle size indicates frequency.

Figure: Confidence & Severity infographic

Confidence & Severity Infographic.

Confidence & Severity Infographic.

By clicking once on any legend item, you will be taken to a detailed report, e.g. detailed reports for each Event Type, Source Host, Infrastructure Device, Event Category and Target Host.

Identify any internal IP address from your network that appears on the Event Target Host(s) list. If an internal IP address appears on the list, the associated device is infected and communicating with a known threat on the internet. Click on the IP address to find out who it is communicating with.

Create a Script

See System > Event Scripts and the GigaFlow Wiki for more.

Create a Site